DoH Service

- Links:

- Usage

DoH (DNS over HTTPS) can work around some cases where a website is unreachable because of DNS problems.

    ~$ apt-get install dnscrypt-proxy -y

- Open `/etc/dnscrypt-proxy/dnscrypt-proxy.toml` in your favorite editor. Find the general section and change the `server_names` variable.

    server_names = ['cloudflare']

- Then change the `nameserver` entry in `/etc/resolv.conf`.

    ~$ cat /etc/resolv.conf
    # Generated by dhcpcd
    nameserver 127.0.0.1
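After these changes it can help to confirm that the system resolver really points at the local proxy. The following is a minimal sketch; the function name `uses_local_resolver` is hypothetical, and it only inspects the file. It does not test whether dnscrypt-proxy is actually answering queries.

```shell
#!/bin/sh
# Return 0 if the given resolv.conf-style file lists 127.0.0.1 as a nameserver.
# The function name is illustrative; it is not part of dnscrypt-proxy.
uses_local_resolver() {
    conf="${1:-/etc/resolv.conf}"
    # Match an uncommented "nameserver 127.0.0.1" line, ignoring surrounding blanks.
    grep -Eq '^[[:space:]]*nameserver[[:space:]]+127\.0\.0\.1[[:space:]]*$' "$conf" 2>/dev/null
}

if uses_local_resolver /etc/resolv.conf; then
    echo "resolv.conf points at the local DoH proxy"
else
    echo "resolv.conf does not list 127.0.0.1"
fi
```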

NextCloud

Introduction

- Nextcloud offers the industry-leading, on-premises content collaboration platform. Our technology combines the convenience and ease of use of consumer-grade solutions like Dropbox and Google Drive with the security, privacy and control business needs.

Links:

Setting up nextcloud + collabora/code with docker-compose (no HTTPS)

Directory layout

    nextcloud$ tree -L 2
    .
    ├── db-data [error opening dir]
    ├── docker-compose.yml
    └── volumes
        ├── config
        ├── custom_apps
        ├── data
        ├── html
        └── theme

    7 directories, 1 file

docker-compose.yml

    nextcloud$ cat docker-compose.yml

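If `nextcloud` is installed without HTTPS enabled, the list of installable apps is not shown on the `http://cloud.domain.im/settings/apps` page. In that case you can download app packages manually from `https://apps.nextcloud.com`, extract them into `nextcloud/html/apps`, and enable them; they then work just as if they had been installed online. As the docker-compose.yml above shows, you also need to add a record such as `192.168.1.100 cloud.domain.im` to the host's `/etc/hosts` and mount that file into the container, so that the domain name can be used for testing and for generating the certificate.

Installing the Collabora Online server

    ~$ cd volumes/html/apps
    ~$ wget -c https://github.com/nextcloud-releases/richdocuments/releases/download/v5.0.3/richdocuments-v5.0.3.tar.gz
    ~$ wget -c https://github.com/CollaboraOnline/richdocumentscode/releases/download/21.11.306/richdocumentscode.tar.gz
    # tar reads one archive per call, so unpack the tarballs one at a time
    ~$ for f in *.tar.gz; do tar xvf "$f"; done
    ~$ sudo chown www-data:www-data -R richdocuments richdocumentscode

Configuring the Collabora Online server URL

- Open `http://cloud.domain.im:8080/settings/admin/richdocuments`, select Use your own server, enter `http://cloud.domain.im:9080`, and click Save. If the status above shows a green check mark together with the message Collabora Online server is reachable., the connection to the server is working.

Now go into a folder in the cloud drive. If files such as `docx` and `odt` can be opened, the installation succeeded. Opening `http://cloud.domain.im:9080/browser/dist/admin/admin.html` shows management and monitoring information for the Collabora Online server. Files in the drive that are currently open for editing in Collabora Online also appear in the Documents open list.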
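The `/etc/hosts` record mentioned in this section can be added idempotently, so that repeated runs do not duplicate it. This is a minimal sketch: `add_hosts_entry` is a hypothetical helper, and the IP address and host name are simply the example values from the text. The demonstration writes to a scratch file rather than the real `/etc/hosts`.

```shell
#!/bin/sh
# Append "IP HOSTNAME" to a hosts file unless the host name is already mapped.
# add_hosts_entry is a hypothetical helper, not part of nextcloud or docker.
add_hosts_entry() {
    ip="$1"; host="$2"; file="${3:-/etc/hosts}"
    # Escape dots so they match literally in the regular expression.
    host_re=$(printf '%s' "$host" | sed 's/\./\\./g')
    if grep -Eq "^[^#]*[[:space:]]${host_re}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Demonstrate on a scratch file; pass /etc/hosts (as root) for real use.
scratch=$(mktemp)
add_hosts_entry 192.168.1.100 cloud.domain.im "$scratch"
add_hosts_entry 192.168.1.100 cloud.domain.im "$scratch"   # second call is a no-op
cat "$scratch"
rm -f "$scratch"
```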

Self-signed HTTPS version

The overall project directory layout is as follows:

    nextcloud$ tree -L 2

The mounted nginx directory layout is as follows:

    nextcloud$ tree nginx/

The global nginx main configuration file.

    nextcloud$ cat nginx/nginx.conf
    user nginx;
    worker_processes 1;

    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;

    events {
        worker_connections 2048;
        multi_accept on;
    }

    error_log syslog:server=unix:/dev/log,facility=local6,tag=nginx,severity=error;

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log /var/log/nginx/access.log main;

        index index.html index.htm;
        charset utf-8;
        server_tokens off;
        autoindex off;
        client_max_body_size 512m;

        include mime.types;
        default_type application/octet-stream;

        sendfile on;
        sendfile_max_chunk 51200k;
        tcp_nopush on;
        tcp_nodelay on;

        open_file_cache max=1000 inactive=20s;
        open_file_cache_valid 30s;
        open_file_cache_min_uses 2;
        open_file_cache_errors off;

        ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
        ssl_session_tickets off;
        ssl_session_cache shared:SSL:50m;
        ssl_session_timeout 10m;
        ssl_stapling off;
        ssl_stapling_verify off;
        resolver 8.8.8.8 8.8.4.4; # replace with `127.0.0.1` if you have a local dns server
        ssl_prefer_server_ciphers on;
        ssl_dhparam ssl/dhparam.pem; # openssl dhparam -out ssl/dhparam.pem 4096

        gzip on;
        gzip_disable msie6;
        gzip_vary on;
        gzip_proxied any;
        gzip_types text/plain text/css application/json application/javascript text/xml application/xml application/xml+rss text/javascript;

        include conf.d/*.conf;
        include sites-enabled/*.conf;
    }

The nextcloud site proxy configuration file

    nextcloud$ cat nginx/sites-enabled/nextcloud.conf

- A one-shot `openssl` script is used here to create the self-signed certificate.

    nextcloud$ cat create_self-sign-tls.sh

- One-shot script for creating a self-signed TLS certificate

- The final `docker-compose` file

    nextcloud$ cat docker-compose.yml

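- As the file above shows, the default configuration file inside `collabora/code` also has to be modified. A copy of the original file is first taken out of the running container, edited, and then mounted back so that it overrides the configuration inside the container.

    nextcloud$ docker cp <container ID>:/etc/coolwsd/coolwsd.xml ./collabora/coolwsd.xml

- Open `coolwsd.xml`, find the `server_name` node, and set its value to `cloud.domain.im`, as follows:

    nextcloud$ cat collabora/coolwsd.xml

During testing I found that without the `coolwsd.xml` change above, Collabora Online cannot open files for editing, and the log reports the following error.

    wsd-00001-00039 2022-04-22 15:52:33.479492 +0000 [ websrv_poll ] ERR #29 Error while handling poll at 0 in websrv_poll: #29BIO error: 0, rc: -1: error:00000000:lib(0):func(0):reason(0):| net/Socket.cpp:467

Because `nginx` acts as the HTTPS proxy, `nextcloud/config/config.php` also has to be modified: add `'overwriteprotocol' => 'https',` so that redirects use HTTPS.

    nextcloud$ sudo cat volumes/config/config.php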
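A quick way to confirm that the setting is present is to search the file for it. The sketch below assumes the `volumes/config/config.php` path used in this setup; `has_https_overwrite` is a hypothetical helper name, and the check is a plain text match rather than a real PHP parse.

```shell
#!/bin/sh
# Return 0 if a Nextcloud config.php-style file sets 'overwriteprotocol' to 'https'.
# has_https_overwrite is a hypothetical helper name.
has_https_overwrite() {
    grep -Eq "'overwriteprotocol'[[:space:]]*=>[[:space:]]*'https'" "$1" 2>/dev/null
}

# Path as used in this setup; reading it may require sudo.
if has_https_overwrite volumes/config/config.php; then
    echo "overwriteprotocol is https"
else
    echo "overwriteprotocol is missing or not https"
fi
```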

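Docker installation

- nextcloud/docker

- This is for personal use, so there are no special database requirements and `sqlite` is used. HTTPS is still required for security. Installation is done with docker.

    ~$ docker images | grep "nextcloud" || docker pull nextcloud

- Below, nextcloud runs in `docker` with the sqlite database and is exposed externally through a cloudflare tunnel, with no nginx or similar proxy configured.

    <?php

- The following parameters need to be configured:

  - trusted_domains:

  - overwrite.cli.url: https://mycloud.example.com/

  - overwritehost: '',

  - overwriteprotocol: 'https', because the `tunnel` serves external access over https.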

Dokku installation

Installing plugins

    ~$ sudo dokku plugin:install https://github.com/dokku/dokku-letsencrypt.git

Installation

    ~$ dokku apps:create mycloud

Link the database. After running the commands below, open a browser and go through the first-run setup for the administrator account and the database type (Storage & database); the default is `SQLite`.

    ~$ dokku postgres:create mycloud_db
    ~$ dokku postgres:link mycloud_db mycloud

Set the domain name. The domain is needed mainly for `Let's Encrypt`, and also for SNI. No domain was registered here; a dynamic domain from dynv6.net is used instead.

    ~$ dokku domains:add mycloud nc.llccyy.dynv6.net
    ~$ dokku domains:remove mycloud mycloud.localhost
    ~$ dokku config:set mycloud --no-restart DOKKU_LETSENCRYPT_EMAIL=yjdwbj@gmail.com
    ~$ dokku letsencrypt mycloud

Error when obtaining the certificate:

    darkhttpd/1.12, copyright (c) 2003-2016 Emil Mikulic.

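- When the problem above occurs, it is almost always a `DNS A` record problem: the domain name cannot be resolved. It may also be a problem on the DNS provider's side. I ran into this while using the dynv6.com service; sometimes a retry after a while succeeded without any change. You can also try a different DDNS provider.

- When using `Let's Encrypt` in `dokku` to obtain a certificate, note the following:

  - A domain name is required (with an A or AAAA record); a dynamic subdomain also works.

  - `DOKKU_DOCKERFILE_PORTS: 80/tcp` must be port 80. For example, `nextcloud:latest` exposes 80/tcp, while `nextcloud:fpm-alpine` exposes 9000/tcp, and `nextcloud:fpm-alpine` is only a php fpm instance without a web server. An app created from `nextcloud:latest` can therefore obtain the certificate correctly.

  - If `DOKKU_DOCKERFILE_PORTS` is not port 80, first set the proxy mapping with `dokku proxy:ports-set <APP> http:80:9000`, then request the certificate.

- If the app was created from a Docker image, that is, deployed with `dokku tags:deploy <appname> latest`, the following docker command shows the details of its Exposed ports.

    ~$ docker inspect <appname>.web.1 | jq ".[0].Config.ExposedPorts"

- Or inspect the image configuration directly

    ~$ docker image inspect <image tag> | jq '.[0].Config.ExposedPorts'

- Set the upload size limit

- nginx configuration

    ~$ dokku nginx:set mycloud client-max-body-size 100m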

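Audio and video

jellyfin media center

    ~$ docker pull jellyfin/jellyfin

KODI (XBMC)

kodi supports a great many platforms. A Xiaomi TV 2 runs a heavily customized `android 4.3`, so the newest version that can be installed on it is kodi-16.1-Jarvis-armeabi-v7a.apk. In this version many built-in Add-ons no longer work and many plugins are broken as well; for example, the jellyfin-kodi plugin cannot be used. It still works well together with minidlna, and at least gives a better experience than SMBFS.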

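Local bookmark service

PDF to text with OCR

poppler-utils

- Taking a Japanese manual as the example below, `pdftotext` cannot convert the text and outputs garbage, and `pdftohtml` reports a copyright problem.

    ~$ sudo apt-get install poppler-utils

ocrmypdf

`ocrmypdf` cannot convert an encrypted PDF either

    ~$ sudo apt-get install ocrmypdf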

Tesseract

    ~$ dpkg -l | grep "tesseract"

`Tesseract` does not support recognition directly on PDF files

    ~$ tesseract ~/Downloads/SDFA.pdf ttt --dpi 150
    Tesseract Open Source OCR Engine v4.1.1 with Leptonica
    Error in pixReadStream: Pdf reading is not supported
    Error in pixRead: pix not read
    Error during processing.

First convert the PDF into one `png` image per page,

    ~$ pdftoppm -png ~/Downloads/SDFA.pdf turing

Install the target language pack

    ~$ tesseract --list-langs
    List of available languages (5):
    chi_sim
    cym
    eng
    jpn
    osd
    ~$ tesseract turing-2.png turing -l jpn --dpi 150

This outputs the text file `turing.txt`.
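The command above handles a single page. To process every page image that `pdftoppm` produced, the same call can be wrapped in a loop. This is a minimal sketch: `ocr_pages` is a hypothetical helper, the merged output file name is an assumption, and the language and DPI values are taken from the example above.

```shell
#!/bin/sh
# OCR every page image produced by pdftoppm and merge the text into one file.
# ocr_pages is a hypothetical helper; language and DPI follow the example above.
ocr_pages() {
    prefix="$1"; lang="${2:-jpn}"; out="${3:-$prefix-all.txt}"
    : > "$out"
    for png in "$prefix"-*.png; do
        [ -e "$png" ] || continue          # no matching page images
        # tesseract writes <output base>.txt, here next to the image
        tesseract "$png" "${png%.png}" -l "$lang" --dpi 150 || return 1
        cat "${png%.png}.txt" >> "$out"
    done
}

# Usage, after `pdftoppm -png ~/Downloads/SDFA.pdf turing`:
#   ocr_pages turing jpn turing-all.txt
```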

Frog

- TenderOwl/Frog/Extract text from any image, video, QR Code and etc.

Offline wiki

Kiwix

Building from source

    ~$ sudo apt-get install libxapian-dev libpugixml-dev

    ~$ git clone https://github.com/kainjow/Mustache

Install `libkiwix` first

    ~$ git clone https://github.com/kiwix/libkiwix

- [openzim/libzim](https://github.com/openzim/libzim)

    ~$ git clone https://github.com/openzim/libzim

    ~$ git clone https://github.com/kiwix/kiwix-desktop

- Build a `deb` package directly

    ~$ cat > rules.patch <<EOF

Setting up a Matrix service

Use `docker-compose` to set up a local matrix service for testing

    ~$ mkdir matrix

If this is only a test, or the `VPS` has limited resources, `sqlite3` is sufficient; comment out the `postgres` entry.

docker-compose.yaml

    version: '3.8'

- Download the `element` template configuration file and delete the line `"default_server_name": "matrix.org"`.

    matrix$ wget https://develop.element.io/config.json

Generating the Synapse configuration file

    matrix$ mkdir synapse

`Synapse` uses sqlite3 by default. To use postgres, set `database` in `synapse/homeserver.yaml` as follows:

    database:

- Create a new user

    matrix$ docker-compose up -d

caddy v2 proxy

    $ apt install -y debian-keyring debian-archive-keyring apt-transport-https

    matrix$ cat Caddyfile

- Load it into the system configuration and run it

    matrix$ caddy adapt --config /path/path/Caddyfile

- Note: if a site block in the `Caddyfile` is written only as `<domain.com> {}`, caddy automatically redirects http -> https. For intranet testing or testing in a local browser this causes errors such as Mixed content blocked.
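One way to avoid the automatic redirect during local testing is to give the site address an explicit `http://` scheme, which keeps Caddy v2 from enabling automatic HTTPS for that site. The sketch below only generates such a file; the host name `matrix.localhost`, the upstream `127.0.0.1:8008`, and the helper name are assumptions for illustration, not values from the original Caddyfile.

```shell
#!/bin/sh
# Write a Caddyfile whose site address carries an explicit http:// scheme,
# so Caddy v2 serves plain HTTP for it and does not redirect to HTTPS.
# Host name and upstream address are placeholders, not the author's values.
write_http_only_caddyfile() {
    site="$1"; upstream="$2"; out="${3:-Caddyfile}"
    cat > "$out" <<EOF
http://$site {
    reverse_proxy $upstream
}
EOF
}

write_http_only_caddyfile matrix.localhost 127.0.0.1:8008 Caddyfile.local
cat Caddyfile.local
```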

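Connecting the mobile app

- Go to the web admin page `https://<domain>/settings/user/security`. Under Devices & sessions, click Create new app password to generate a one-off user name and password, then click Show QR code from mobile apps. Open the mobile nextcloud app, choose to connect to a self-hosted server, and scan the code.

- If you run into problems, check `https://<domain>/settings/admin/logging`.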

MinIO

MinIO is a high-performance object store released under the GNU Affero General Public License v3.0. Its API is compatible with the Amazon S3 cloud storage service. Use MinIO to build high-performance infrastructure for machine learning, analytics, and application data workloads.

Running in a container

    ~$ sudo apt-get install podman -y

If the following error appears, make sure `/etc/containers/registries.conf` contains the line `unqualified-search-registries=["docker.io"]`, then retry.

    Error: error getting default registries to try: short-name "minio/minio" did not resolve to an alias and no unqualified-search registries are defined in "/etc/containers/registries.conf"

It can also be run with `docker`

    ~$ docker run -it --rm -p 9000:9000 \
        -v `pwd`/minio-data:/data \
        -e MINIO_ROOT_USER=minio \
        -e MINIO_ROOT_PASSWORD=minio123 \
        -p 9001:9001 minio/minio server /data --console-address ":9001"
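Before pointing a client at the container, it can be useful to wait until the server answers on its liveness endpoint, `/minio/health/live`. A minimal sketch follows; `wait_for_minio` is a hypothetical helper, the base URL matches the port mapping above, and `curl` is assumed to be installed.

```shell
#!/bin/sh
# Poll MinIO's liveness endpoint until it answers or the attempts run out.
# wait_for_minio is a hypothetical helper; the default URL matches the mapping above.
wait_for_minio() {
    base="${1:-http://127.0.0.1:9000}"; tries="${2:-10}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -f makes curl return non-zero on HTTP errors.
        if curl -fsS -o /dev/null "$base/minio/health/live" 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Usage: wait_for_minio http://127.0.0.1:9000 30 && echo "MinIO is up"
```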

Running standalone directly

    ~$ wget http://dl.minio.org.cn/server/minio/release/darwin-amd64/minio

Client access

mc

    ~$ ./mc alias set myminio http://127.0.0.1:9000 minio minio123

Adding a user

    ~$ ./mc admin user add myminio testuser testpwd123
    Added user `testuser` successfully.
    ~$ ./mc admin user info myminio testuser
    AccessKey: testuser
    Status: enabled
    PolicyName:
    MemberOf:

Adding a bucket

    ~$ ./mc mb myminio/test-new-s3-bucket
    Bucket created successfully `myminio/test-new-s3-bucket`.

Creating a `bucket policy`

    ~$ cat test-policy.json
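The contents of `test-policy.json` are not shown here. For orientation only, a policy of this kind generally follows the S3 IAM policy document format. The sketch below writes an illustrative file under a different name; the action list, the helper name, and the file name `example-policy.json` are assumptions, not the author's actual policy.

```shell
#!/bin/sh
# Write an illustrative IAM-style policy granting read/write access to one bucket.
# This is NOT the author's test-policy.json; actions and file name are assumptions.
write_example_policy() {
    bucket="$1"; out="${2:-example-policy.json}"
    cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:ListBucket"],
      "Resource": [
        "arn:aws:s3:::$bucket",
        "arn:aws:s3:::$bucket/*"
      ]
    }
  ]
}
EOF
}

write_example_policy test-new-s3-bucket example-policy.json
cat example-policy.json
```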

Add the policy file to the server

    ~$ ./mc admin policy add myminio test-policy test-policy.json
    Added policy `test-policy` successfully.

Apply the policy to the specified user

    ~$ ./mc admin policy set myminio "test-policy" user=testuser
    Policy `test-policy` is set on user `testuser`
    ~$ ./mc admin user info myminio testuser
    AccessKey: testuser
    Status: enabled
    PolicyName: test-policy
    MemberOf:

awscli

    ~$ pip3 install awscli

    ~$ aws configure --profile minio

Create a `bucket`.

    ~$ aws --profile minio --endpoint-url http://127.0.0.1:9000 s3 mb s3://new-s3-bucket

List the `bucket`s on the server.

    ~$ aws --profile minio --endpoint-url http://127.0.0.1:9000 s3 ls
    2021-12-13 23:16:20 new-s3-bucket
    2021-12-13 22:58:15 test-new-s3-bucket

Upload a file

    ~$ aws --profile minio --endpoint-url http://127.0.0.1:9000 s3 cp test-policy.json s3://new-s3-bucket
    upload: ./test-policy.json to s3://new-s3-bucket/test-policy.json

Now compare this with the structure of the local directory `minio-data` that is bound into the `minio` container.

    minio$ tree minio-data/

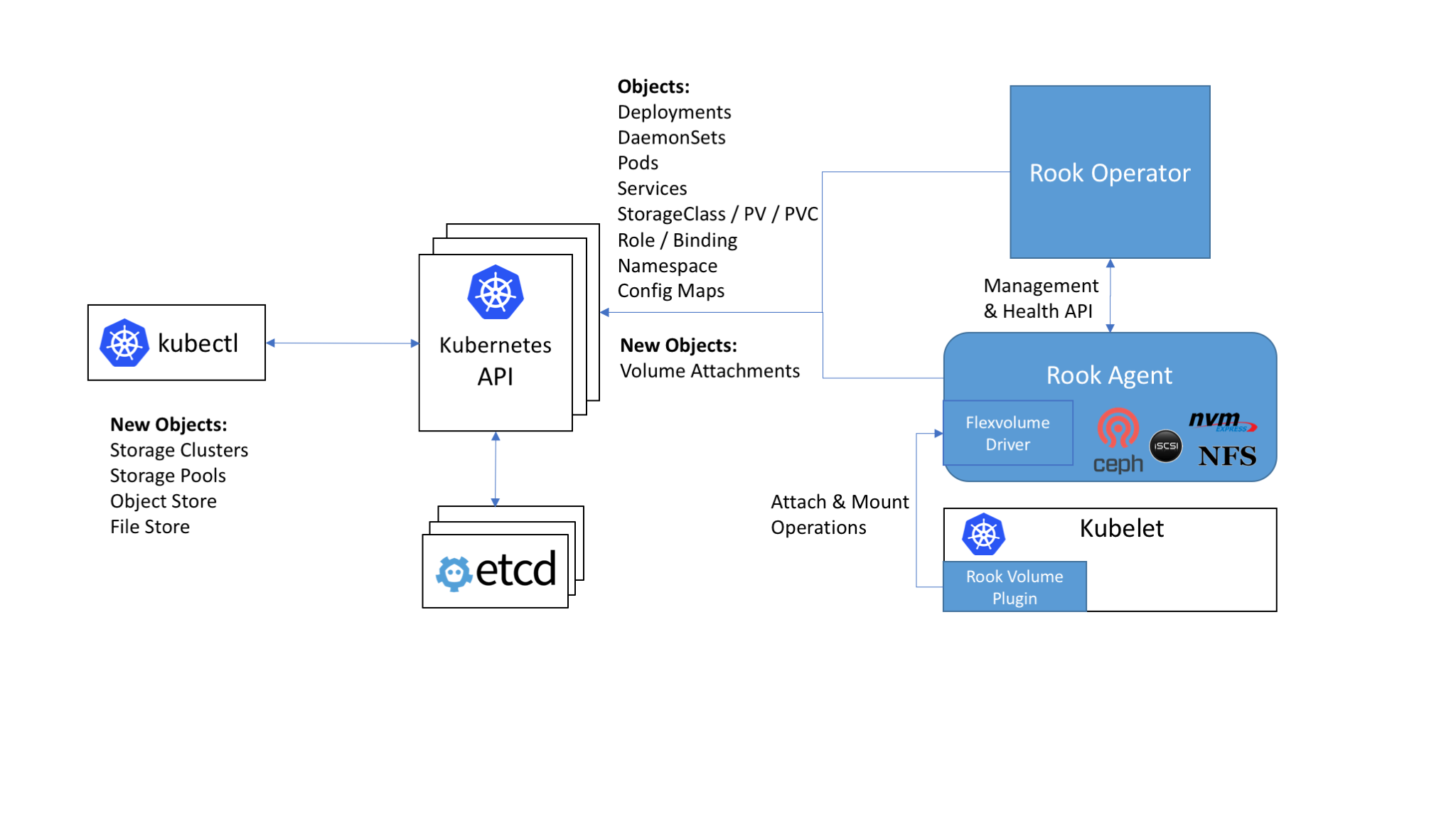

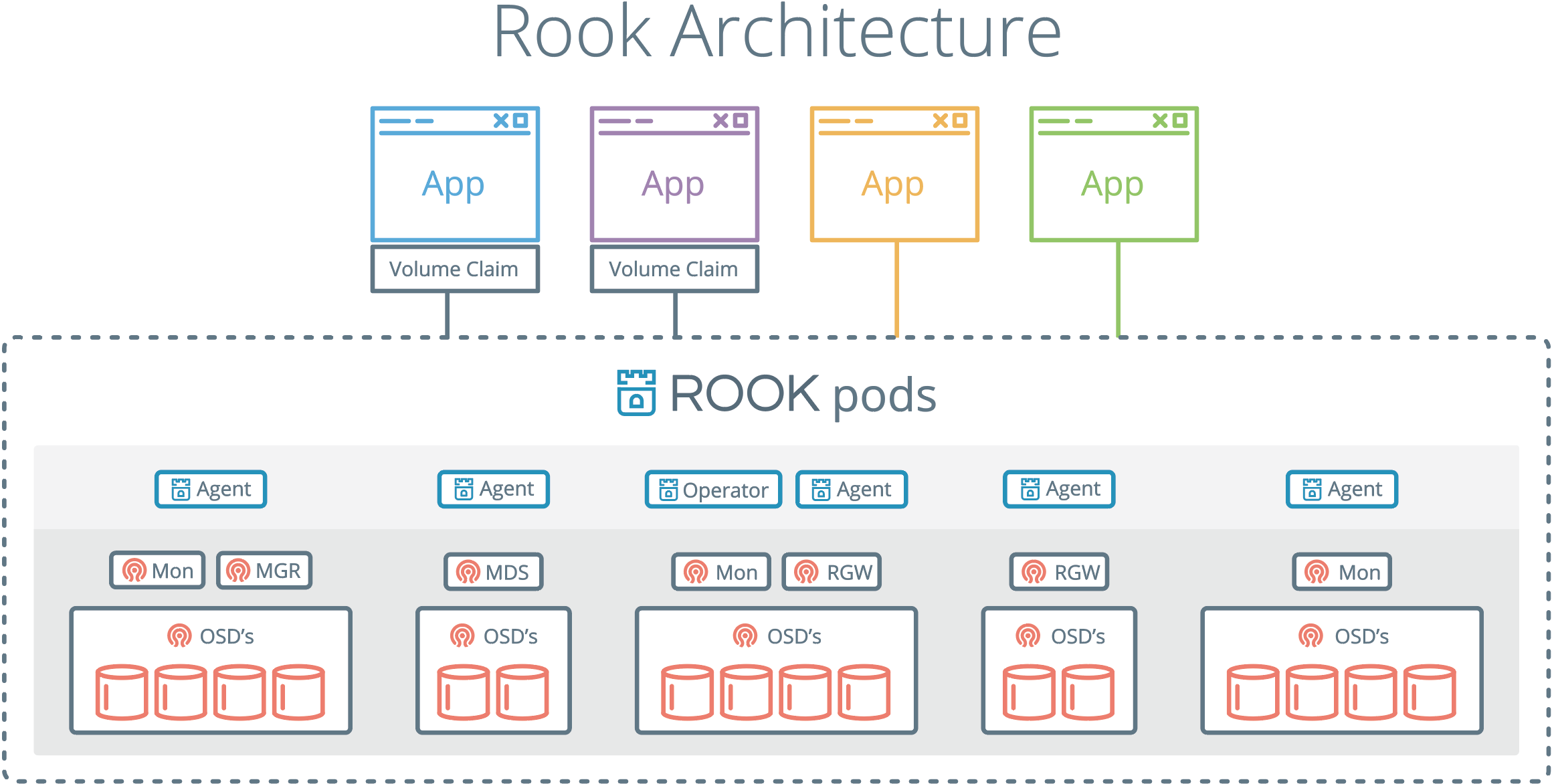

In a Kubernetes environment, use the MinIO Kubernetes Operator to create, configure, and manage MinIO clusters on k8s.

Syncthing

    ~$ docker pull syncthing/syncthing

Creating with docker-compose

Syncthing is created here with `docker-compose`, with support for the Traefik reverse proxy so that it can be exposed externally. This is only a local internal test, so https and a real domain name are not configured.

    syncthing$ cat .env
    # Syncthing
    DOCKER_SYNCTHING_IMAGE_NAME=syncthing/syncthing
    DOCKER_SYNCTHING_HOSTNAME=syncthing-on-storage
    DOCKER_SYNCTHING_DOMAIN=syncthing.localhost
    # discosrv
    DOCKER_DISCOSRV_IMAGE_NAME=syncthing/discosrv
    DOCKER_DISCOSRV_HOSTNAME=discosrv-on-storage
    DOCKER_DISCOSRV_DOMAIN=discosrv.localhost
    # exporter
    DOCKER_EXPORTER_IMAGE_NAME=soulteary/syncthing-exporter
    # xxd -l 16 -p /dev/random | base64
    DOCKER_EXPORTER_API_TOKEN=OTU0NGJmMGJhYzRiNGEzM2Q3Yzc4MjhjOTdhZjJkMDAK
    DOCKER_EXPORTER_HOSTNAME=syncthing-exporter-on-storage
    DOCKER_EXPORTER_DOMAIN=syncthing-exporter.localhost

The `docker-compose.yml` file works together with the `.env` file in the current directory.

    syncthing$ cat docker-compose.yml

- Open `http://127.0.0.1:8384` in a browser to reach the console. On mobile, install the syncthing client to connect.
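The comment in the `.env` file above generates the exporter token with `xxd`. Where `xxd` is not installed, a comparable token can be produced with `od`. This is a sketch under assumptions: `gen_api_token` is a hypothetical name, and it reads from `/dev/urandom` rather than `/dev/random` so that it does not block.

```shell
#!/bin/sh
# Generate a token like the .env comment does: 16 random bytes, hex-encoded, then base64.
# Uses od instead of xxd; gen_api_token is a hypothetical helper name.
gen_api_token() {
    hex=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')
    # xxd -p ends its output with a newline, so one is kept here before encoding.
    printf '%s\n' "$hex" | base64
}

token=$(gen_api_token)
echo "DOCKER_EXPORTER_API_TOKEN=$token"
```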

Building from source and running as a systemd service

    ~$ git clone https://github.com/syncthing/syncthing

Installing mysql + phpadmin with docker-compose

    version: '3'

Collaborative documents

CryptPad

- xwiki-labs/cryptpad

    ~$ sudo dokku apps:create cryptpad
    ~$ docker tag promasu/cryptpad:latest dokku/cryptpad:latest
    ~$ sudo dokku tags:deploy cryptpad latest
    ~$ sudo dokku domains:add cryptpad cryptpad.llccyy.dynv6.net

wiki.js

Installing with docker-compose

    wiki.js$ cat docker-compose.yml

- As shown above, `docker-compose.yml` enables the Traefik reverse proxy. After startup, open `http://wiki.localhost/` to reach the wiki.js setup wizard.

Configuring TLS and the domain

    cat docker-compose-wikijs.yml

- As shown above, the two options `entrypoints=websecure` and `tls.certresolver=myresolver` refer to definitions made on the `traefik` startup command line, and the routing Rule must match the `/wiki` and `/_assets` prefixes. The `traefik` startup command looks roughly like this:

    [...]

- On the first visit to the `wiki` page, an initial setup page is shown. SITE URL must be set to `https://<your full domain>/wiki`.

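LoRaWAN gateway

ChirpStack

Installing as system packages

Basic services such as `mqtt`, `sql`, and `redis` must be installed and configured first.

Set up the ChirpStack repository

    ~$ sudo apt install apt-transport-https dirmngr

Install the basic services first

    ~$ sudo apt install \

- Set up `postgresql` and create the following database.

    ~$ sudo -u postgres psql

Install ChirpStack Gateway Bridge.

    ~$ sudo apt install chirpstack-gateway-bridge

- Taking `EU868` as the example, modify `/etc/chirpstack-gateway-bridge/chirpstack-gateway-bridge.toml` as follows:

    [...]

- Start the service and enable it at boot

    # start chirpstack-gateway-bridge

Install ChirpStack

    sudo apt install chirpstack

Edit `[enabled_regions]` in `/etc/chirpstack/chirpstack.toml` and delete or comment out the regions you do not need. Use `openssl rand -base64 32` to generate a random string and replace the line `secret="you-must-replace-this"` with it.

The backend services it depends on, `postgres`, `redis`, and `mosquitto`, must also be running, and login credentials for each of them must be configured, before the `chirpstack-gateway-bridge` and `chirpstack` services can start properly.

Finally, adjust the `eu868` region parameters in `/etc/chirpstack/region_eu868.toml`. For the parameters and channels of other regions, see https://github.com/chirpstack/chirpstack/tree/master/chirpstack/configuration.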
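The secret replacement described above can be scripted. The following is a minimal sketch, assuming the placeholder line reads exactly `secret="you-must-replace-this"` as quoted in the text; `set_chirpstack_secret` is a hypothetical helper, and the real file normally needs root to edit.

```shell
#!/bin/sh
# Replace the placeholder secret line in a chirpstack.toml-style file.
# set_chirpstack_secret is a hypothetical helper; pass a secret explicitly
# or let `openssl rand -base64 32` generate one.
set_chirpstack_secret() {
    file="$1"
    secret="${2:-$(openssl rand -base64 32)}"
    tmp=$(mktemp) || return 1
    # Base64 output never contains '|' or '&', so '|' is a safe sed delimiter here.
    sed "s|secret=\"you-must-replace-this\"|secret=\"$secret\"|" "$file" > "$tmp" \
        && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Usage (as root): set_chirpstack_secret /etc/chirpstack/chirpstack.toml
```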

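Start the service and enable it at boot

    sudo systemctl start chirpstack

- Open `http://chirpstack-ip:8080` in a browser, using the machine's IP address. The login page appears; the default user name and password are admin and admin.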

Installing with Docker

Installing with `Docker` has several advantages: the configuration is done once and can be copied to other machines for fast, large-scale deployment, and the host system environment is not polluted. Simply download https://github.com/chirpstack/chirpstack-docker to deploy quickly.

    ~$ git clone https://github.com/chirpstack/chirpstack-docker.git

- Cloning the device repository, which defines a number of hardware template files.

    ~$ git clone https://github.com/brocaar/lorawan-devices /opt/lorawan-devices

- Run `docker-compose up`:

    ~$ docker-compose up -d

- After a successful start, `docker ps` shows the following:

    panther@panther-x2:~/chirpstack-docker$ docker ps

- The hardware list above then needs to be imported.

    ~$ docker exec chirpstack-docker_chirpstack_1 chirpstack -c /etc/chirpstack import-legacy-lorawan-devices-repository -d /opt/lorawan-devices

- After a successful import, the hardware list is visible under ChirpStack -> Network Server -> Device Profile Templates.

Registering the LoRaWAN gateway

AI/ML

Voice control

DeepSpeech

- DeepSpeech is an open source embedded (offline, on-device) speech-to-text engine which can run in real time on devices ranging from a Raspberry Pi 4 to high power GPU servers.

MycroftAI/mimic3

- A fast and local neural text to speech system developed by Mycroft for the Mark II.

[MycroftAI/mycroft-core](https://github.com/MycroftAI/mycroft-core)

- Mycroft is a hackable open source voice assistant.

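Communication

Matrix

Server

Dendrite

This mainly follows the official documentation to quickly deploy a service with `docker` as an exercise.

    ~$ git clone https://github.com/matrix-org/dendrite

Client

Project management

OpenProject

Automated testing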

RobotFramework

Trojan

`trojan` and `dokku` share port 443 through layer-4 forwarding.

    ~$ nginx -T

- The corresponding local ports are 5443, 7443, and 6443

    netstat -tnlp

Resource collections

- 100 good free tools and resources

- https://arxiv.org/

- arXiv is a free distribution service and an open-access archive for 1,993,024 scholarly articles in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering and systems science, and economics. Materials on this site are not peer-reviewed by arXiv.

- Computer engineering resource index
