PaaS Overview

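PaaS (Platform as a Service) means offering a software development platform (or a business foundation platform) as a service, delivered to users in the SaaS model. PaaS is one of the service models of cloud computing. Cloud computing is a pay-per-use service, similar to renting. The service can be computing infrastructure (IaaS), a platform (PaaS) or software (SaaS). Business needs are met by renting IT resources. Like water and electricity, computing, storage and networking become resources that an enterprise consumes on demand instead of building them itself. In essence, PaaS turns Internet resources into programmable interfaces and gives third-party developers a platform of commercially valuable resources and services. In short: IaaS sells hardware and computing resources, PaaS sells development and runtime environments, and SaaS sells software.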

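| Type | Description | Analogy | Examples |
|---|---|---|---|
| IaaS: Infrastructure-as-a-Service | Provides computing infrastructure as the service | A plot of land: you build the house yourself | Amazon EC2 (Elastic Compute Cloud), Alibaba Cloud |
| PaaS: Platform-as-a-Service | Provides a software development platform or business foundation platform as the service | An unfurnished apartment: you decorate it yourself | GAE (Google App Engine), Heroku |
| SaaS: Software-as-a-Service | Provides applications running on cloud infrastructure as the service | A hotel suite: you move straight in | Google's Gmail |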

Kubernetes Overview

- What is Kubernetes?

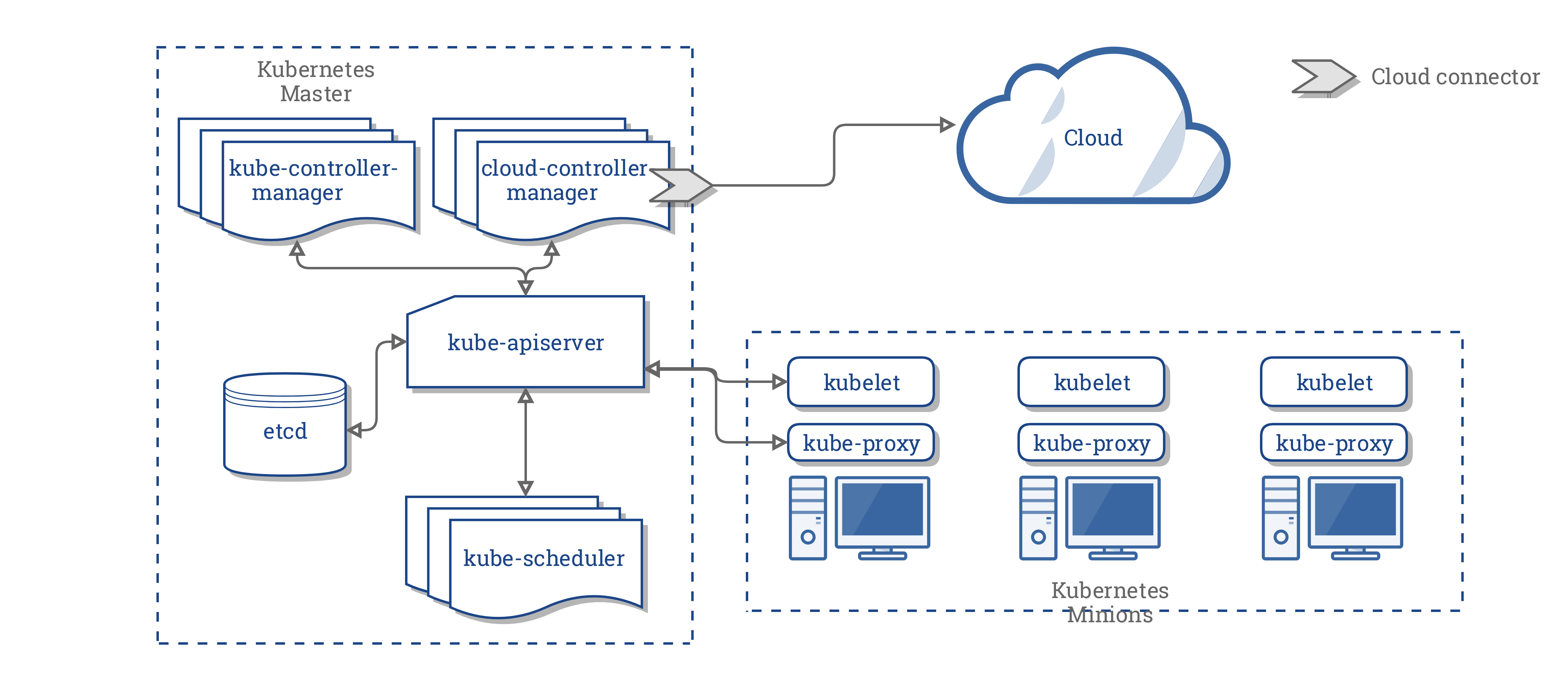

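Kubernetes is an open-source container cluster management system from Google. It is built on top of Docker. It provides containerized applications with a complete set of features, including resource scheduling, deployment and operation, service discovery, and scaling out and in. It can essentially be regarded as a container-based Micro-PaaS platform, and it is the representative project of third-generation PaaS.

- Kubernetes architecture diagram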

Basic Kubernetes Concepts

Pod

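A Pod is a group of related containers and is a logical concept. The containers in a Pod run on the same host and share the same network namespace, IP address and port space. As a result, they can discover and talk to each other through localhost. They also share storage volumes. The Pod is the smallest unit that Kubernetes creates, schedules and manages. A Pod usually holds one business container plus one network container (the infrastructure or `pause` container) used for unified network management.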
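The following is a minimal sketch that makes the shared network namespace and shared volume concrete. It assumes a hypothetical Pod named `shared-demo` with an nginx container and a busybox container; the names and image tags are illustrative only. The function just prints the manifest, so nothing touches a cluster until you pipe it into `kubectl apply -f -`.

```shell
#!/bin/sh
# Print a Pod manifest with two containers that share one emptyDir volume.
# Pod, container and volume names are hypothetical examples.
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
  labels:
    app: shared-demo
spec:
  volumes:
    - name: shared-data          # scratch volume that lives as long as the Pod
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.25
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
    - name: writer
      image: busybox:1.36
      # Writes a page into the shared volume, then polls nginx over localhost,
      # which works because both containers share the Pod's network namespace.
      command: ["sh", "-c", "echo hello > /data/index.html; while true; do wget -qO- http://localhost/; sleep 10; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF
}

# On a real cluster: pod_manifest | kubectl apply -f -
pod_manifest
```

Once the Pod is running, `kubectl logs shared-demo -c writer` should show the page that the writer container fetched from nginx.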

Replication Controller

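A Replication Controller (RC) controls and manages Pod replicas (instances). It ensures that the specified number of Pod replicas is running in the Kubernetes cluster at all times. If there are fewer replicas than specified, it starts new ones. If there are more, it kills the extras to keep the count unchanged. The Replication Controller is also the core mechanism behind elastic scaling and rolling updates.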

Deployment

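A Deployment is a simpler mechanism for updating Pods. It manages them through ReplicaSets, the successor of the RC. You describe the desired cluster state in a Deployment, and the Deployment Controller gradually moves the current cluster state toward it at a controlled rate. Like an RC, a Deployment's main job is to keep Pods at the right count and healthy, and most of its features are the same as an RC's. It can therefore be regarded as a next-generation superset of the RC. Its features include:

- viewing events and status
- rollback
- revision history
- pausing and resuming
- multiple update strategies: Recreate and RollingUpdate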
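The following is a minimal sketch of a Deployment that uses the RollingUpdate strategy. The Deployment name `web`, the `app: web` label and the surge/unavailable values are assumptions chosen for illustration. The image and the replica count are passed as arguments.

```shell
#!/bin/sh
# Print a Deployment manifest. $1 = image, $2 = replica count (default 3).
deployment_manifest() {
  image="${1:?image required}"
  replicas="${2:-3}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: ${replicas}
  revisionHistoryLimit: 5        # old ReplicaSets kept for rollback
  selector:
    matchLabels:
      app: web                   # must match the Pod template labels
  strategy:
    type: RollingUpdate          # the other option is Recreate
    rollingUpdate:
      maxSurge: 1                # at most 1 extra Pod during an update
      maxUnavailable: 0          # never drop below the desired count
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: ${image}
          ports:
            - containerPort: 80
EOF
}

deployment_manifest nginx:1.25 3
# On a real cluster:
#   deployment_manifest nginx:1.25 3 | kubectl apply -f -
#   kubectl rollout status deployment/web     # watch the rolling update
#   kubectl rollout history deployment/web    # revision history
#   kubectl rollout undo deployment/web       # roll back to the previous revision
```

Changing the image and re-applying the manifest triggers a new rollout. Because `maxUnavailable: 0`, old Pods are only removed after their replacements are ready.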

Job

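- By how programs run, Pods fall into two categories: long-running services (JBoss, MySQL, nginx, etc.) and one-off tasks (such as parallel data computation and tests). Pods created by an RC are long-running services, while Pods created by a Job are one-off tasks.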
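The following sketch shows a one-off task as a Job. It is modeled on the "compute π" example from the Kubernetes documentation. The Job name `pi`, the `perl:5.34` image and the retry limit are assumptions. `completions` and `parallelism` are passed in to show how a Job runs several Pods to completion.

```shell
#!/bin/sh
# Print a Job manifest for a one-off task. $1 = completions, $2 = parallelism.
job_manifest() {
  completions="${1:-1}"
  parallelism="${2:-1}"
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  completions: ${completions}   # how many Pods must finish successfully
  parallelism: ${parallelism}   # how many Pods may run at the same time
  backoffLimit: 4               # retries before the Job is marked failed
  template:
    spec:
      restartPolicy: Never      # Job Pods must use Never or OnFailure
      containers:
        - name: pi
          image: perl:5.34
          command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(200)"]
EOF
}

job_manifest 3 2
# On a real cluster: job_manifest 3 2 | kubectl apply -f - ; kubectl get jobs
```

Unlike the Pods of an RC or Deployment, these Pods are not restarted once they exit successfully. The Job is complete when `completions` Pods have succeeded.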

StatefulSet

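A StatefulSet is used for stateful applications and distributed systems. StatefulSets are relatively complex to use, so use one only when the application has one or more of the following requirements:

- Unique, stable network identifiers.

- Stable, persistent data storage.

- Ordered deployment and scaling.

- Ordered deletion and termination.

- Ordered, automated rolling updates.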

Service

- Kubernetes Service documentation

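A Service is an abstraction of a real application service. It defines a logical set of Pods and a policy for accessing that set. A Service presents the Pods it proxies as a single access point, so clients do not need to know how the backend Pods run. This greatly simplifies scaling and maintenance and provides a simple service proxy and discovery mechanism. There are four Service types:

- ClusterIP: exposes the Service on a cluster-internal IP address. This address is reachable only inside the cluster and cannot be accessed by clients outside it. This is the default Service type.

- NodePort: built on top of ClusterIP. It exposes the Service on a static port (the NodePort) of every node's IP address. It therefore still allocates a cluster IP for the Service and uses it as the routing target of the NodePort.

- LoadBalancer: built on top of NodePort. It exposes the Service outside the cluster through a load balancer provided by the cloud provider, so a LoadBalancer Service also has a NodePort and a ClusterIP. Currently only cloud providers support it; when experimenting with VirtualBox you can only use ClusterIP or NodePort.

- ExternalName: exposes the Service by mapping it to the host name given in the `externalName` field. The cluster DNS answers queries for the Service with a CNAME record pointing to that host name.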
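The following sketch shows how a Service ties a stable port to the Pods selected by a label. It assumes Pods labelled `app: web`, such as those in a Deployment named `web`; the Service name and the fixed node port 30080 are hypothetical. The type is passed as an argument. A `nodePort` is only pinned when the type is NodePort; otherwise Kubernetes chooses ports as needed.

```shell
#!/bin/sh
# Print a Service manifest that selects Pods labelled app=web.
# $1 = Service type: ClusterIP (default), NodePort or LoadBalancer.
service_manifest() {
  svc_type="${1:-ClusterIP}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: ${svc_type}
  selector:
    app: web            # traffic goes to Pods carrying this label
  ports:
    - port: 80          # port exposed on the Service's cluster IP
      targetPort: 80    # container port on the selected Pods
EOF
  # For NodePort, pin the port opened on every node (default range 30000-32767).
  if [ "$svc_type" = "NodePort" ]; then
    echo "      nodePort: 30080"
  fi
}

service_manifest NodePort
# On a real cluster: service_manifest NodePort | kubectl apply -f -
# then the Service is reachable at http://<any-node-ip>:30080/
```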

Ingress

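An Ingress resource implements an "HTTP(S) load balancer" and is one of the standard resource types of the k8s API. It is essentially a set of rules that forward requests to specified Service resources based on DNS names or URL paths. It is used to route traffic from outside the cluster to services inside the cluster. The Ingress resource itself does not carry traffic; it is only a collection of routing rules. Unlike the Deployment controller and similar controllers, the Ingress controller does not run as part of kube-controller-manager. It is an important add-on of the k8s cluster that, like CoreDNS, must be deployed on the cluster separately.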

Label

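A Label is a key/value pair used to identify Pods, Services and Replication Controllers; in fact, any API object in Kubernetes can be identified by Labels. Each API object can have multiple Labels, but each Label key maps to only one value. Labels are the foundation on which Services and Replication Controllers operate, because both associate themselves with Pods through Labels. Compared with a strongly bound model, this gives a loosely coupled relationship.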

Node

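Kubernetes uses a master-worker distributed cluster architecture. A Kubernetes Node (Node for short; called Minion in early versions) runs and manages containers. The Node is Kubernetes' unit of operation: Pods (that is, containers) are bound to Nodes and ultimately run on them. A Node can therefore be thought of as the Pod's host.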

Kubernetes Architecture

Master Node

The Master is the brain of the k8s cluster. It runs the following services:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- etcd, and the Pod network (e.g. Flannel, Canal)

API Server(kube-apiserver)

The API Server is the front-end interface of the k8s cluster. Various tools use it to manage the cluster's resources.

Scheduler(kube-scheduler)

The Scheduler decides which Node a Pod runs on. When scheduling, it takes the cluster topology fully into account to find the best placement.

Controller Manager(kube-controller-manager)

The Controller Manager manages the cluster's resources and keeps them in their desired state. It consists of multiple controllers.

etcd

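etcd stores the k8s configuration and the state of all resources. When data changes, etcd quickly notifies the relevant k8s components through its watch mechanism.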

Pod Network

- For Pods to communicate with each other, k8s requires a Pod network to be deployed; flannel is one of the available options.

Worker Node

A Node is where Pods run. k8s supports container runtimes such as Docker and rkt. The components that run on a Node are kubelet, kube-proxy and the Pod network.

kubelet

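kubelet is the Node's agent. After the Scheduler decides to run a Pod on a Node, the Pod's configuration (image, volumes) is sent to that Node's kubelet. The kubelet creates the containers from this information and reports their status to the master.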

kube-proxy

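A Service logically represents multiple backend Pods, and clients access the Pods through the Service. kube-proxy is responsible for forwarding the requests a Service receives to the corresponding Pods. When there are multiple replicas, kube-proxy load-balances among them.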

Secret & ConfigMap

Secrets provide Pods with sensitive data such as passwords, tokens and private keys. For non-sensitive data, such as application configuration, use a ConfigMap.
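The following sketch shows the difference between the two objects. ConfigMap values are stored as plain text. Secret values under `data` must be base64-encoded, which is an encoding and not encryption. The names `app-config`, `app-secret`, `LOG_LEVEL` and `DB_PASSWORD` are hypothetical.

```shell
#!/bin/sh
# Print a ConfigMap and a Secret. $1 = the password to store in the Secret.
# base64 only encodes the value; anyone allowed to read the Secret can decode it.
config_manifests() {
  db_password="${1:?password required}"
  # tr removes the line wrapping some base64 implementations add to long output
  encoded="$(printf '%s' "$db_password" | base64 | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Secret
metadata:
  name: app-secret
type: Opaque
data:
  DB_PASSWORD: ${encoded}
EOF
}

config_manifests 's3cret'
# On a real cluster: config_manifests 's3cret' | kubectl apply -f -
```

On a real cluster, `kubectl create secret generic app-secret --from-literal=DB_PASSWORD=...` does the encoding for you. A container can then consume both objects, for example through `envFrom` with a `configMapRef` and a `secretRef`.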

Add-ons

Networking

- ACI provides integrated container networking and network security with Cisco ACI.

- Calico is a secure L3 networking and network policy provider.

- Canal unites Flannel and Calico, providing networking and network policy.

- Cilium is an L3 network and network policy plugin that can enforce HTTP/API/L7 policies transparently. Both routing and overlay/encapsulation mode are supported.

- CNI-Genie enables Kubernetes to seamlessly connect to a choice of CNI plugins, such as Calico, Canal, Flannel, Romana, or Weave.

- Contiv provides configurable networking (native L3 using BGP, overlay using vxlan, classic L2, and Cisco-SDN/ACI) for various use cases and a rich policy framework. Contiv project is fully open sourced. The installer provides both kubeadm and non-kubeadm based installation options.

- Contrail, based on Tungsten Fabric, is an open source, multi-cloud network virtualization and policy management platform. Contrail and Tungsten Fabric are integrated with orchestration systems such as Kubernetes, OpenShift, OpenStack and Mesos, and provide isolation modes for virtual machines, containers/pods and bare metal workloads.

- Flannel is an overlay network provider that can be used with Kubernetes.

- Knitter is a network solution supporting multiple networking in Kubernetes.

- Multus is a Multi plugin for multiple network support in Kubernetes to support all CNI plugins (e.g. Calico, Cilium, Contiv, Flannel), in addition to SRIOV, DPDK, OVS-DPDK and VPP based workloads in Kubernetes.

- NSX-T Container Plug-in (NCP) provides integration between VMware NSX-T and container orchestrators such as Kubernetes, as well as integration between NSX-T and container-based CaaS/PaaS platforms such as Pivotal Container Service (PKS) and OpenShift.

- Nuage is an SDN platform that provides policy-based networking between Kubernetes Pods and non-Kubernetes environments with visibility and security monitoring.

- Romana is a Layer 3 networking solution for pod networks that also supports the NetworkPolicy API. Kubeadm add-on installation details are available here.

- Weave Net provides networking and network policy, will carry on working on both sides of a network partition, and does not require an external database.

Service Discovery

- CoreDNS is a flexible, extensible DNS server which can be installed as the in-cluster DNS for pods.

Visualization Dashboards

- Dashboard is a dashboard web interface for Kubernetes.

- Weave Scope is a tool for graphically visualizing your containers, pods, services etc. Use it in conjunction with a Weave Cloud account or host the UI yourself.

Installing Minikube

- Links:

- Github - minikube

- Github - kubectl

- Running Kubernetes Locally via Minikube

- Creating a single master cluster with kubeadm

- Kubernetes Handbook (Chinese) / Cloud Native Application Architecture Practice Manual

- Hello Kubevirt On Minikube

- How to run Minikube on KVM

- Install k8s Minikube on top of KVM on Debian 9

- Sharing an NFS Persistent Volume (PV) Across Two Pods

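- Usage

Minikube is the simplest and fastest way to run a Kubernetes cluster. It is a tool for building a single-node cluster and is very useful both for testing Kubernetes and for developing applications locally. Here it is installed on Debian. By default it uses VirtualBox as the VM driver, but kvm2 can be installed and used as the driver instead. For Minikube's options, refer to the docker-machine parameters.

- On first use, `minikube start` creates a ~/.minikube directory and downloads minikube-vxxx.iso into ~/.minikube/cache/. If the download is very slow, you can download https://storage.googleapis.com/minikube/iso/minikube-v0.35.0.iso manually and copy it into that directory.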

```shell
~$ curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.35.0/minikube-linux-amd64 && chmod +x minikube && sudo cp minikube /usr/local/bin/ && rm minikube
```

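- Start a Minikube virtual machine. Running `minikube start` with no options uses VirtualBox by default, with the `default` network. The value of the `--kvm-network` parameter comes from `virsh net-list`. minikube-net is an isolated network, but that does not mean it cannot reach the Internet. For Internet access, it must be bridged or NATed to the host's network card or network.

k8s.gcr.io cannot be reached directly from mainland China. As a result, minikube cannot pull the images it needs and ultimately does not work. There are a few workarounds; the simplest is to use a proxy:

```shell
--docker-env HTTP_PROXY=<ip:port> --docker-env HTTPS_PROXY=<ip:port> --docker-env NO_PROXY=127.0.0.1,localhost
```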

- If the server can reach the Internet, add `--insecure-registry k8s.gcr.io` to `OPTIONS` in the docker daemon startup parameters (/etc/sysconfig/docker).

```shell
~$ minikube start --vm-driver=kvm2 --kvm-network minikube-net --registry-mirror=https://registry.docker-cn.com --kubernetes-version v1.14.0
```

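Testing showed that kubeadm can also install by pulling images directly from other domestic (mainland China) mirrors. Installing through a proxy also works, although finding a stable, reliable proxy is somewhat difficult. `minikube start --vm-driver=kvm2` creates a virtual machine and, inside it, runs `sudo kubeadm config images pull --config /var/lib/kubeadm.yaml`. This pulls the required docker images locally and deploys the various k8s services. By default it pulls the images from the https://k8s.gcr.io/v2/ domain:

```shell
~$ minikube start --vm-driver=kvm2 --kvm-network minikube-net --registry-mirror=https://registry.docker-cn.com
```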

It turns out that the `--registry-mirror` parameter has no effect, and the following error is still reported:

```
Unable to pull images, which may be OK: running cmd: sudo kubeadm config images pull --config /var/lib/kubeadm.yaml: command failed: sudo kubeadm config images pull --config /var/lib/kubeadm.yaml
stdout:
stderr: failed to pull image "k8s.gcr.io/kube-apiserver:v1.14.0": output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
```

Download kubectl to the local development machine (the control side):

```shell
~$ curl -Lo kubectl https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl
~$ chmod +x kubectl && sudo mv kubectl /usr/local/bin
# Enable bash auto-completion
~$ echo "source <(kubectl completion bash)" >> ~/.bashrc
~$ kubectl get nodes
NAME       STATUS   ROLES    AGE   VERSION
minikube   Ready    <none>   94m   v1.13.4
```

Building Minikube from Source

Download the latest Go compiler

```shell
~$ wget -c https://dl.google.com/go/go1.12.4.linux-amd64.tar.gz
~$ sudo tar xvf go1.12.4.linux-amd64.tar.gz -C /opt/
~$ export PATH=/opt/go/bin:$PATH
```

Download the source code. First create the /opt/go/src/k8s.io directory and clone the code into it, then replace the image registry address:

```shell
~$ sudo mkdir -p /opt/go/src/k8s.io && sudo chown $USER /opt/go/src/k8s.io && cd /opt/go/src/k8s.io && git clone https://github.com/kubernetes/minikube.git
~$ cd minikube && for item in `grep -l "k8s.gcr.io" -r *`;do sed -i "s#k8s.gcr.io#registry.cn-hangzhou.aliyuncs.com/google_containers#g" $item ;done
~$ make
~$ sudo cp out/minikube-linux-amd64 /usr/local/bin/minikube
```

In summary, retagging images with `docker tag` still has problems. A Minikube built from source cleanly solves the firewall (GFW) problem. Downloading a third-party Minikube binary from the Internet, on the other hand, carries the risk that it contains something malicious.
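The ``for item in `grep -l ...` `` loop above breaks on file names that contain spaces. The unescaped dots in `k8s.gcr.io` also match any character. The following is a slightly more defensive sketch of the same replacement. It assumes GNU grep, xargs and sed (as on Debian), and it assumes that neither registry name contains `#`, `&` or `\`. The function name `rewrite_registry` is hypothetical.

```shell
#!/bin/sh
# Replace a registry host in every file under a directory.
# $1 = directory, $2 = old registry (matched literally), $3 = new registry.
rewrite_registry() {
  dir="$1"; old="$2"; new="$3"
  # Escape the dots so sed matches the old name literally.
  old_re="$(printf '%s' "$old" | sed 's/\./\\./g')"
  # -F: fixed-string search; -Z/-0: NUL-separated names so paths with spaces survive;
  # -r on xargs: do not run sed at all when no file matched.
  grep -rlZF -- "$old" "$dir" | xargs -0 -r sed -i "s#${old_re}#${new}#g"
}

# Usage inside the minikube checkout:
#   rewrite_registry . k8s.gcr.io registry.cn-hangzhou.aliyuncs.com/google_containers
```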

Troubleshooting

```
⌛  Waiting for pods: apiserver proxy
💣  Error restarting cluster: wait: waiting for k8s-app=kube-proxy: timed out waiting for the condition
```

Manually Deploying Kubernetes Components

- Links:

Installing kubeadm on Debian and Ubuntu

- kubeadm: the command to bootstrap the cluster.

- kubelet: the component that runs on all of the machines in your cluster and does things like starting pods and containers.

- kubectl: the command line util to talk to your cluster.


- When installing these three components, pay attention to version compatibility among them.

Official package repository (not usable from mainland China)

```shell
~$ apt-get update && apt-get install -y apt-transport-https curl dirmngr
```

Alibaba Cloud mirror repository

```shell
~$ apt-get update && apt-get install -y apt-transport-https
```

- List the docker images required by the current version:

```shell
~$ kubeadm config images list --kubernetes-version v1.14.0
```

Installing the Master Node

Network Model

- Kubernetes currently supports many networking solutions, such as Flannel, Canal, Weave Net and Calico, all of which implement the CNI specification.

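Canal is used as the example below. To install the others, see the "Installing a Pod network add-on" section here (Chinese documentation). A Pod network must be specified when running `kubeadm init`; otherwise other problems will occur. Following the link above, for example:

- Calico network model: `kubeadm init --pod-network-cidr=192.168.0.0/16`. It works only on the amd64, arm64 and ppc64le platforms.

- Flannel network model: `kubeadm init --pod-network-cidr=10.244.0.0/16`, and set the kernel parameter with `sysctl net.bridge.bridge-nf-call-iptables=1`. It works on Linux amd64, arm, arm64, ppc64le and s390x.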

```shell
~$ kubectl apply -f \
```

- Install the Master node. In mainland China you must specify `--image-repository`, because the default k8s.gcr.io cannot be reached directly. In addition, `--kubernetes-version` must match the version of the kubelet component.

```shell
~$ sudo kubeadm init --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.14.0 --pod-network-cidr=10.244.0.0/16
```
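The same `kubeadm init` flags can also be kept in a configuration file, which is easier to review and reuse. The following sketch assumes the `kubeadm.k8s.io/v1beta1` config API used by kubeadm 1.13/1.14; newer kubeadm versions use `v1beta2` or later. The Pod CIDR defaults to Flannel's 10.244.0.0/16.

```shell
#!/bin/sh
# Print a kubeadm ClusterConfiguration equivalent to:
#   kubeadm init --image-repository ... --kubernetes-version ... --pod-network-cidr ...
# $1 = Kubernetes version, $2 = Pod CIDR.
kubeadm_config() {
  version="${1:-v1.14.0}"
  pod_cidr="${2:-10.244.0.0/16}"
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: ${version}
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: ${pod_cidr}
EOF
}

kubeadm_config v1.14.0 10.244.0.0/16
# On the master node:
#   kubeadm_config > kubeadm.yaml
#   sudo kubeadm config images pull --config kubeadm.yaml
#   sudo kubeadm init --config kubeadm.yaml
```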

- Following the `kubeadm init` parameters, install the Flannel network plugin:

```shell
~$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.extensions/kube-flannel-ds-amd64 created
daemonset.extensions/kube-flannel-ds-arm64 created
daemonset.extensions/kube-flannel-ds-arm created
daemonset.extensions/kube-flannel-ds-ppc64le created
daemonset.extensions/kube-flannel-ds-s390x created
```

- Follow the instructions printed after a successful installation to configure access to the Master node. For installing the network plugin, refer to the documentation here.

```shell
~$ mkdir -p $HOME/.kube
```

Modifying the kubelet Startup Parameters

The kubelet component is managed by systemd (through systemctl), so its configuration files can be found under /etc/systemd/system on every node:

```shell
~# cat /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
# Note: This dropin only works with kubeadm and kubelet v1.11+
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
EnvironmentFile=-/etc/default/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS
```
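The `kubeadm join` output later in this section warns that Docker uses the `cgroupfs` cgroup driver while `systemd` is recommended. The Kubernetes container-runtime setup guide addresses this by setting Docker's driver in /etc/docker/daemon.json; the kubelet must use the same driver. kubeadm records the driver for the kubelet (in kubeadm-flags.env) when `init`/`join` runs, so the simplest approach is to make this change before running those commands. The following sketch writes such a file. Note that it overwrites any other settings already in daemon.json.

```shell
#!/bin/sh
# Write a Docker daemon.json that selects the systemd cgroup driver.
# $1 = output path (normally /etc/docker/daemon.json).
write_docker_daemon_json() {
  out="${1:?output path required}"
  cat > "$out" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# On each node, as root:
#   write_docker_daemon_json /etc/docker/daemon.json
#   systemctl daemon-reload && systemctl restart docker
#   docker info | grep -i 'cgroup driver'    # expected: Cgroup Driver: systemd
```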

Adding Cluster Nodes

- After installing the three components above on another machine, run the following command to join it to the k8s cluster:

```shell
~$ sudo kubeadm join 172.18.127.186:6443 --token z8r97j.3ovdfddb6df9lnq7 --discovery-token-ca-cert-hash sha256:07767a67fa6c38feda7471ee5e1a15a0a9c417cfdf6cf457ff577297f22d9415
[preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```

- On the Master node, check the nodes in the cluster:

```shell
~$ kubectl get node
```
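The `--discovery-token-ca-cert-hash` in the join command above is the SHA-256 digest of the cluster CA's public key in DER-encoded SubjectPublicKeyInfo form. If the join command has been lost, `kubeadm token create --print-join-command` on the master prints a fresh one. The hash can also be recomputed with the openssl pipeline given in the kubeadm documentation. The following sketch wraps that pipeline. It assumes an RSA CA key, which is what kubeadm generates by default; the function name `ca_cert_hash` is hypothetical.

```shell
#!/bin/sh
# Compute the value for kubeadm's --discovery-token-ca-cert-hash (without the "sha256:" prefix).
# $1 = CA certificate, normally /etc/kubernetes/pki/ca.crt on the master.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:?ca cert required}" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'     # keep only the hex digest after the last space
}

# On the master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```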

- View the complete cluster architecture. Applications can also run on the Master, i.e. the Master is also a Node.

```shell
~$ kubectl get pod --all-namespaces -o wide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
kube-system coredns-d5947d4b-sr5zt 1/1 Running 0 6m3s 10.244.0.6 k8s-master <none> <none>
kube-system coredns-d5947d4b-tznh2 1/1 Running 0 6m3s 10.244.0.5 k8s-master <none> <none>
kube-system etcd-k8s-master 1/1 Running 0 5m11s 172.18.127.186 k8s-master <none> <none>
kube-system kube-apiserver-k8s-master 1/1 Running 0 5m15s 172.18.127.186 k8s-master <none> <none>
kube-system kube-controller-manager-k8s-master 1/1 Running 0 5m13s 172.18.127.186 k8s-master <none> <none>
kube-system kube-flannel-ds-amd64-9d965 1/1 Running 0 5m17s 172.18.127.186 k8s-master <none> <none>
kube-system kube-flannel-ds-amd64-c8dkh 1/1 Running 0 38s 172.18.192.76 dig001 <none> <none>
kube-system kube-flannel-ds-amd64-kswj2 1/1 Running 0 52s 172.18.253.222 fe001 <none> <none>
kube-system kube-proxy-5g9vp 1/1 Running 0 38s 172.18.192.76 dig001 <none> <none>
kube-system kube-proxy-cqzfl 1/1 Running 0 52s 172.18.253.222 fe001 <none> <none>
kube-system kube-proxy-pjbbg 1/1 Running 0 6m3s 172.18.127.186 k8s-master <none> <none>
kube-system kube-scheduler-k8s-master 1/1 Running 0 5m19s 172.18.127.186 k8s-master <none> <none>
```

- Get the full information of a Pod:

```shell
~$ kubectl get pod <podname> --output json # show the full Pod information as JSON
~$ kubectl get pod <podname> --output yaml # show the full Pod information as YAML
```

Tearing Down the k8s Cluster

```shell
~$ kubectl drain <node name> --delete-local-data --force --ignore-daemonsets
```

Installing HelloWorld with Shared Pod Data

```yaml
apiVersion: v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
```

```shell
~$ kubectl apply -f hello-world.yaml
```

Installing Kubernetes from GitHub

Etcd

```shell
~$ wget -c https://github.com/etcd-io/etcd/releases/download/v3.3.12/etcd-v3.3.12-linux-amd64.tar.gz
```

Run etcd:

```shell
~$ etcd -name etcd --data-dir /var/lib/etcd -listen-client-urls http://0.0.0.0:2379,http://0.0.0.0:4001 \
    -advertise-client-urls http://0.0.0.0:2379,http://0.0.0.0:4001 >> /var/log/etcd.log 2>&1 &
```

Check its health:

```shell
~$ etcdctl -C http://127.0.0.1:4001 cluster-health
member 8e9e05c52164694d is healthy: got healthy result from http://0.0.0.0:2379
cluster is healthy
```

Installing from the Kubernetes Release Package

Download the latest release from GitHub:

```shell
~$ wget -c https://github.com/kubernetes/kubernetes/releases/download/v1.14.0/kubernetes.tar.gz
~$ tar xvf kubernetes.tar.gz && cd kubernetes
~$ tree -L 1
.
├── client
├── cluster
├── docs
├── hack
├── LICENSES
├── README.md
├── server
└── version
$ cat server/README
Server binary tarballs are no longer included in the Kubernetes final tarball.

Run cluster/get-kube-binaries.sh to download client and server binaries.
```

As the README above says, the server components are not included in this tarball. They must be downloaded from https://dl.k8s.io/v1.14.0 by running cluster/get-kube-binaries.sh. However, dl.k8s.io cannot be reached directly from mainland China.

Installing the MinIO Service (Single Node)

Links:

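MinIO is a high-performance, Kubernetes-native object store whose API is compatible with the Amazon S3 cloud storage service. The kubectl-based installation below follows Deploy MinIO on Kubernetes; MinIO can also be installed with Helm. To quickly run a single-node service, run the command below. Once it succeeds, open http://127.0.0.1:9000 and log in to the console with minioadmin:minioadmin.

```shell
~$ podman run -p 9000:9000 -p 9001:9001 \
    quay.io/minio/minio server /data --console-address ":9001"
```

Or bind the local directory minio_data into the container:

```shell
~$ podman run -v `pwd`/minio_data:/data -p 9000:9000 -p 9001:9001 \
    quay.io/minio/minio server /data --console-address ":9001"
```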

MinIO Server

Creating a PV (Persistent Volume)

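- In a Kubernetes environment, the MinIO Kubernetes Operator can be used.

- Below is a resource description file that creates a 10G, local-type PV. A PV can be understood as a piece of storage in some network storage system of the k8s cluster, and it is similar to a Volume. A PV is usually backed by network storage. It does not belong to any Node, but it can be accessed from every Node. A PV is not defined on a Pod; it is defined independently of Pods. PV types currently supported include:

- GCEPersistentDisk

- AWSElasticBlockStore

- AzureFile

- FC (Fibre Channel)

- NFS

- iSCSI

- RBD (Rados Block Device)

- CephFS

- GlusterFS

- HostPath (for single-node testing only), and more.

```shell
~$ cat pv.yaml
```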
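The contents of pv.yaml are not reproduced above. The following is a hedged sketch of a 10Gi hostPath PV that a claim like `minio-pv-claim` (ReadWriteOnce, `storageClassName: standard`, 10Gi, as in the PVC file in the next step) could bind to. The PV name `minio-pv` and the host directory are hypothetical. hostPath is only suitable for single-node testing.

```shell
#!/bin/sh
# Print a hostPath PersistentVolume. $1 = size (default 10Gi), $2 = directory on the node.
pv_manifest() {
  size="${1:-10Gi}"
  host_dir="${2:-/mnt/data/minio}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv
spec:
  capacity:
    storage: ${size}             # must be >= the PVC request to bind
  accessModes:
    - ReadWriteOnce              # must include the mode the PVC asks for
  storageClassName: standard     # must equal the PVC's storageClassName
  persistentVolumeReclaimPolicy: Retain
  hostPath:
    path: ${host_dir}                # single-node testing only
EOF
}

pv_manifest 10Gi /mnt/data/minio
# On a real cluster: pv_manifest | kubectl apply -f - ; kubectl get pv
```

Once a matching PV exists, a Pending claim with the same storage class and access mode should change to Bound.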

Installing the MinIO PVC (Persistent Volume Claim)

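A PVC specifies the amount of storage it needs, and k8s selects a PV that satisfies the request and binds them. If a PVC is not bound to a PV after it is created, it stays Pending. For this reason, experiment in the order PV -> PVC -> Deployment -> Service. A PV can be in one of these phases:

- Available: free, not yet bound to any claim.

- Bound: bound to a PVC.

- Released: the corresponding PVC has been deleted, but the resource has not yet been reclaimed by the cluster.

- Failed: automatic reclamation of the PV failed.

```shell
~$ kubectl create -f https://github.com/minio/minio/blob/master/docs/orchestration/kubernetes/minio-standalone-pvc.yaml?raw=true
```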

You can also download the file from the link above and modify it locally:

```yaml
~$ cat minio-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  # This name uniquely identifies the PVC. This is used in deployment.
  name: minio-pv-claim
spec:
  # Read more about access modes here: http://kubernetes.io/docs/user-guide/persistent-volumes/#access-modes
  storageClassName: standard
  accessModes:
    # The volume is mounted as read-write by a single node
    - ReadWriteOnce
  resources:
    # This is the request for storage. Should be available in the cluster.
    requests:
      storage: 10Gi
```

Check the status of the PVC in the system. It is shown as Pending below; use describe to view the details:

```shell
~$ kubectl get pvc --namespace default
NAME             STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
minio-pv-claim   Pending
~$ kubectl get pvc --namespace default
NAME             STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
minio-pv-claim   Pending                                                     2m22s
lcy@k8s-master:~$ kubectl describe pvc minio-pv-claim
Name:          minio-pv-claim
Namespace:     default
StorageClass:
Status:        Pending
Volume:
Labels:        <none>
Annotations:   <none>
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:
Access Modes:
VolumeMode:    Filesystem
Events:
  Type    Reason         Age                 From                         Message
  ----    ------         ----                ----                         -------
  Normal  FailedBinding  4s (x14 over 3m2s)  persistentvolume-controller  no persistent volumes available for this claim and no storage class is set
Mounted By:  minio-6d4d48db87-wxr4d
```

The Events above show the following error:

```
FailedBinding 4s (x14 over 3m2s) persistentvolume-controller no persistent volumes available for this claim and no storage class is set
```

This is why the claim is Pending. The next step is to resolve these dependency errors.

Installing the MinIO Deployment

```shell
~$ kubectl create -f https://github.com/minio/minio/blob/master/docs/orchestration/kubernetes/minio-standalone-deployment.yaml?raw=true
```

Installing the MinIO Service

```shell
~$ kubectl create -f https://github.com/minio/minio/blob/master/docs/orchestration/kubernetes/minio-standalone-service.yaml?raw=true
```

- As shown by the command below, the Pod is Pending because of: `Warning FailedScheduling 5m10s (x7 over 12m) default-scheduler 0/3 nodes are available: 3 node(s) had taints that the pod didn't tolerate.`

```shell
~$ kubectl get pod --namespace default -l app=minio
```

Exposing Services through Ingress

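- There are two ways to expose services to clients outside the cluster: LoadBalancer and Ingress. Each LoadBalancer Service needs its own load balancer and its own public IP address, whereas an Ingress needs only one public IP to provide access to many services. When a client sends an HTTP request to the Ingress, the Ingress decides which service to forward it to based on the request's host name and path. Ingress operates at the application layer (HTTP) of the network stack and can provide features that Services cannot, such as cookie-based session affinity.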
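The following sketch shows one Ingress fanning out to two Services by host name, using the single public entry point described above. It assumes the `networking.k8s.io/v1` Ingress API (Kubernetes 1.19 and later; a 1.14 cluster like the one built earlier would need `networking.k8s.io/v1beta1` with a different backend syntax). It also assumes that an Ingress controller is already installed. The host names and Service names are hypothetical.

```shell
#!/bin/sh
# Print an Ingress that routes two host names to two Services (names are hypothetical).
# $1 = ingressClassName of the installed controller (e.g. traefik or nginx).
ingress_manifest() {
  ingress_class="${1:-traefik}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo
spec:
  ingressClassName: ${ingress_class}
  rules:
    - host: minio.example.com          # requests with this Host header ...
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: minio-service    # ... go to this Service
                port:
                  number: 9000
    - host: whoami.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: whoami
                port:
                  number: 80
EOF
}

ingress_manifest traefik
# On a real cluster: ingress_manifest traefik | kubectl apply -f -
```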

Traefik Reverse Proxy

- Reference links:

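Traefik is a cloud-native reverse proxy and load balancer written in Go. Its biggest difference from nginx is that it updates its reverse-proxy and load-balancing configuration automatically. It supports multiple backends:

- Docker / Swarm mode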

- Kubernetes

- Marathon

- Rancher (Metadata)

- File

Quick Installation and Testing

Get a Traefik configuration. You can copy a simple configuration from here, or download a Traefik binary from https://github.com/traefik/traefik/releases and start it with that configuration:

```shell
~$ ./traefik -c traefik.toml
```
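Since the referenced sample configuration is not reproduced here, the following is a minimal sketch of a Traefik v2 static configuration. It assumes the Docker provider and the unauthenticated dashboard on port 8080, matching the ports used in the `docker run` command that follows; it is for testing only. For the Docker provider to see other containers when Traefik itself runs in Docker, the container also needs `/var/run/docker.sock` mounted, as the docker-compose file later in this section does.

```shell
#!/bin/sh
# Write a minimal Traefik v2 static configuration (TOML). $1 = output file.
write_traefik_toml() {
  cat > "${1:-traefik.toml}" <<'EOF'
# Entry point for plain HTTP traffic
[entryPoints]
  [entryPoints.web]
    address = ":80"

# Dashboard/API on :8080 without authentication (testing only)
[api]
  insecure = true
  dashboard = true

# Discover routes from Docker container labels
[providers.docker]
  exposedByDefault = true
EOF
}

# Usage: write_traefik_toml traefik.toml
```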

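Alternatively, run a test directly with Docker, then open http://127.0.0.1:8080/dashboard/#/ in a browser to view the dashboard. Traefik has several important core components:

- Providers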

- Entrypoints

- Routers

- Services

- Middlewares

```shell
~$ docker run -d -p 8080:8080 -p 80:80 -v $PWD/traefik.toml:/etc/traefik/traefik.toml traefik
```

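Testing with docker-compose

- The following simple compose setup gives an intuitive understanding of what Traefik is for and how it is used. Version v2.5 is used here, which differs from the old v1.2. The test directory structure is:

```shell
~$ tree
```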

Run a Traefik instance:

```
traefik$ cat docker-compose-v2.yml
version: '3'

services:
  reverse-proxy:
    # The official v2 Traefik docker image
    image: traefik:v2.5
    # Enables the web UI and tells Traefik to listen to docker
    command: --api.insecure=true --providers.docker
    ports:
      # The HTTP port
      - "80:80"
      # The Web UI (enabled by --api.insecure=true)
      - "8080:8080"
    volumes:
      # So that Traefik can listen to the Docker events
      - /var/run/docker.sock:/var/run/docker.sock
traefik$ docker-compose -f docker-compose-v2.yml up -d
Creating network "traefik_default" with the default driver
Creating traefik_reverse-proxy_1 ... done
```

Run three test instances (traefik/whoami):

```shell
whoami-app$ cat docker-compose-v2.yml
```
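The whoami compose file itself is not shown above. The following is a hypothetical reconstruction that is consistent with what follows: a Host rule for `whoami.docker.localhost`, three scaled replicas, and membership in the external `traefik_default` network. The router name and labels are assumptions, not the author's original file.

```shell
#!/bin/sh
# Write a docker-compose file for traefik/whoami behind the Traefik instance above.
# $1 = output file. The router name "whoami" is a hypothetical choice.
write_whoami_compose() {
  cat > "${1:-docker-compose-v2.yml}" <<'EOF'
version: '3'

services:
  whoami:
    image: traefik/whoami
    networks:
      - traefik_default
    labels:
      # Route requests whose Host header is whoami.docker.localhost to this service
      - "traefik.http.routers.whoami.rule=Host(`whoami.docker.localhost`)"

networks:
  traefik_default:
    external: true   # created by the Traefik compose project
EOF
}

# whoami-app$ write_whoami_compose docker-compose-v2.yml
# whoami-app$ docker-compose -f docker-compose-v2.yml up -d --scale whoami=3
```

Scaling to three replicas is what makes Traefik list three backend servers for one service in the output below.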

You can check the status of the services in the browser at http://127.0.0.1:8080/dashboard/#/http/services, or with the following command:

```shell
~$ curl http://localhost:8080/api/rawdata | jq -c '.services'
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  1829  100  1829    0     0  1786k      0 --:--:-- --:--:-- --:--:-- 1786k
{"api@internal":{"status":"enabled","usedBy":["api@internal"]},"dashboard@internal":{"status":"enabled","usedBy":["dashboard@internal"]},"noop@internal":{"status":"enabled"},"reverse-proxy-traefik@docker":{"loadBalancer":{"servers":[{"url":"http://172.30.0.2:80"}],"passHostHeader":true},"status":"enabled","usedBy":["reverse-proxy-traefik@docker"],"serverStatus":{"http://172.30.0.2:80":"UP"}},"whoami-whoami-app@docker":{"loadBalancer":{"servers":[{"url":"http://172.31.0.4:80"},{"url":"http://172.31.0.2:80"},{"url":"http://172.31.0.3:80"}],"passHostHeader":true},"status":"enabled","usedBy":["whoami@docker"],"serverStatus":{"http://172.31.0.2:80":"UP","http://172.31.0.3:80":"UP","http://172.31.0.4:80":"UP"}}}
```

Or like this:

```shell
~$ curl -H Host:whoami.docker.localhost http://127.0.0.1
Hostname: 4c9f9a107136
IP: 127.0.0.1
IP: 172.19.0.6
RemoteAddr: 172.19.0.1:46902
GET / HTTP/1.1
Host: whoami.docker.localhost
User-Agent: curl/7.74.0
Accept: */*
Accept-Encoding: gzip
X-Forwarded-For: 172.19.0.1
X-Forwarded-Host: whoami.docker.localhost
X-Forwarded-Port: 80
X-Forwarded-Proto: http
X-Forwarded-Server: 8a986b075043
X-Real-Ip: 172.19.0.1
```

Enabling Certificates and Basic Auth

Below is a more complex docker-compose file that supports automatic certificate issuance from Let's Encrypt:

```yaml
version: "3.3"

services:
  traefik:
    image: "traefik:v2.5"
    container_name: "traefik"
    command:
      - "--api.insecure=false"
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"
      - "--providers.file.directory=/letsencrypt/"
      - "--entrypoints.web.address=:80"
      - "--entrypoints.websecure.address=:443"
      - "--certificatesresolvers.myresolver.acme.httpchallenge=true"
      - "--certificatesresolvers.myresolver.acme.httpchallenge.entrypoint=web"
      #- "--certificatesresolvers.myresolver.acme.caserver=https://acme-staging-v02.api.letsencrypt.org/directory"
      - "--certificatesresolvers.myresolver.acme.email=<your email>@gmail.com"
      - "--certificatesresolvers.myresolver.acme.storage=/letsencrypt/acme.json"
      # Global HTTP -> HTTPS
      - "--entrypoints.web.http.redirections.entryPoint.to=websecure"
      - "--entrypoints.web.http.redirections.entryPoint.scheme=https"
      # Enable dashboard
      - "--api.dashboard=true"
    ports:
      - "80:80"
      - "443:443"
      - "8080:8080"
    volumes:
      - "./certs:/letsencrypt"
      - "/var/run/docker.sock:/var/run/docker.sock:ro"
    labels:
      - "traefik.enable=true"
      - "traefik.http.services.api@internal.loadbalancer.server.port=8080" # required by swarm but not used.
      - "traefik.http.routers.traefik.rule=Host(`<Your FQDN domain name>`) && (PathPrefix(`/dashboard`) || PathPrefix(`/api`))"
      - "traefik.http.routers.traefik.middlewares=traefik-https-redirect"
      - "traefik.http.routers.traefik.entrypoints=websecure"
      - "traefik.http.routers.traefik.tls.certresolver=myresolver"
      - "traefik.http.routers.traefik.tls=true"
      - "traefik.http.routers.traefik.tls.options=default"
      - "traefik.http.middlewares.traefik-https-redirect.redirectscheme.scheme=https"
      - "traefik.http.routers.traefik.middlewares=traefik-auth"
      - "traefik.http.middlewares.traefik-auth.basicauth.users=<login name>:$$apr1$$Pf2MP/Oy$$...."
      - "traefik.http.routers.traefik.service=api@internal"
      #- 'traefik.http.routers.traefik.middlewares=strip'
      #- 'traefik.http.middlewares.strip.stripprefix.prefixes=/dashboard'

  whoami:
    image: "traefik/whoami"
    container_name: "simple-service"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.whoami.rule=Host(`<Your FQDN domain name>`) && Path(`/whoami`)"
      - "traefik.http.routers.whoami.entrypoints=websecure"
      - "traefik.http.routers.whoami.tls.certresolver=myresolver"
```

The certificates above are obtained through the HTTP challenge (`httpchallenge`); DNS validation can be used instead. The configuration also enables TLS plus Basic Auth for the Traefik Dashboard. The whoami service is only an illustration: it shows that rules of the form `Host() && Path(/whoami)` can reverse-proxy many different internal services. As shown above, the local ./certs directory is mounted at /letsencrypt, so the certs directory contains the following files; acme.json holds the certificate data.

```shell
~$ tree ./certs/
```

Create tls.yml to raise the TLS security level, with the following content. You can test the server's TLS rating at https://www.ssllabs.com; a rating of A+ is very good.

```
~$ cat certs/tls.yml
tls:
  options:
    default:
      minVersion: "VersionTLS13"
      sniStrict: true
      # cipherSuites only apply to TLS 1.2 and below; with minVersion TLS 1.3 they have no effect.
      cipherSuites:
        - TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384
        - TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384
        - TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256
        - TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
        - TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
        - TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305
```

Create the Basic Auth user and password. The `htpasswd` command from the apache2-utils package is commonly used, but the methods below also work. In docker-compose, `$` must be escaped as `$$`.

```shell
# -apr1 uses the apr1 algorithm (Apache variant of the BSD algorithm).
```

- Or like this:

```shell
~$ printf "<Your User>:$(openssl passwd -apr1 <Your password> | sed -E "s:[\$]:\$\$:g")\n" >> ~/.htpasswd
```
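The `$` to `$$` escaping can be wrapped in two small functions, as in the following sketch. It assumes `openssl passwd -apr1` is available (OpenSSL 1.1 or later); the function names are hypothetical. Passing the password as a command-line argument makes it visible in the process list, so this is only appropriate on a trusted machine.

```shell
#!/bin/sh
# Double every "$" so a hash can be pasted into a docker-compose label
# (compose treats a single "$" as the start of a variable).
escape_compose_dollars() {
  printf '%s\n' "$1" | sed 's/\$/$$/g'
}

# Build a "user:hash" entry with openssl's apr1 (Apache MD5) scheme, escaped for compose.
basicauth_entry() {
  user="${1:?user required}"
  pass="${2:?password required}"
  escape_compose_dollars "${user}:$(openssl passwd -apr1 "$pass")"
}

# Usage: paste the output after traefik.http.middlewares.traefik-auth.basicauth.users=
#   basicauth_entry admin 'my-password'
```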

Installing MinIO with docker-compose

Run a slightly more complex MinIO service instance:

```yaml
version: "3"
services:
  minio:
    # Please use fixed versions :D
    image: minio/minio
    hostname: minio
    networks:
      - traefik_default
    volumes:
      - $PWD/minio-data:/data
    command:
      - server
      - /data
      - --console-address
      - ":9001"
    expose:
      - 9000
      - 9001
    environment:
      - MINIO_ROOT_USER=minio
      - MINIO_ROOT_PASSWORD=minio123
      - APP_NAME=minio
      # Do NOT use MINIO_DOMAIN or MINIO_SERVER_URL with Traefik.
      # All Routing is done by Traefik, just tell minio where to redirect to.
      - MINIO_BROWSER_REDIRECT_URL=http://minio-console.localhost
    labels:
      - traefik.enable=true
      - traefik.docker.network=traefik_default
      - traefik.http.routers.minio.rule=Host(`minio.localhost`)
      - traefik.http.routers.minio-console.rule=Host(`minio-console.localhost`)
      - traefik.http.routers.minio.service=minio
      - traefik.http.services.minio.loadbalancer.server.port=9000
      - traefik.http.services.minio-console.loadbalancer.server.port=9001
      - traefik.http.routers.minio-console.service=minio-console
networks:
  traefik_default:
    external: true
```

```shell
minio$ docker-compose up -d
Creating minio_minio_1 ... done
```

After running the instance above, open http://minio-console.localhost in a browser and log in to the MinIO console with minio:minio123. Why can a domain name like minio-console.localhost reverse-proxy an internal service? Open http://localhost:8080/dashboard/#/http/routers to see the corresponding routers, or list the routers with the following command:

```shell
~$ curl http://localhost:8080/api/rawdata | jq '.routers[] | .service'
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  2509    0  2509    0     0  2450k      0 --:--:-- --:--:-- --:--:-- 2450k
"api@internal"
"dashboard@internal"
"minio-console"
"minio"
"reverse-proxy-traefik"
"whoami-whoami-app"
```

One point to note when testing: if minio, traefik and the other services are described in the same docker-compose.yml file, there is no need to specify a networks section. If they are written in separate files, each must declare that it is on the same network as the traefik service. In the tests above, docker-compose creates a network named traefik_default by default when it starts the traefik service. That is why both the whoami and minio instances above declare the networks field. The local Docker network list is:

```shell
~$ docker network ls
```

Traefik can create routing rules in several ways, for example:

- Native Ingress

- CRD IngressRoute

- Gateway API
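The following is an illustration of the CRD approach: a sketch of an IngressRoute that mirrors the `minio-console.localhost` label rule from the MinIO compose file above. It assumes that Traefik v2's CRDs are installed in the cluster (API group `traefik.containo.us/v1alpha1`; Traefik v3 moved to `traefik.io/v1alpha1`). It also assumes a Service named `minio-console` on port 9001, which is hypothetical.

```shell
#!/bin/sh
# Print a Traefik v2 IngressRoute that sends Host(`minio-console.localhost`) to a Service.
# $1 = Service name, $2 = Service port (both hypothetical defaults).
ingressroute_manifest() {
  svc="${1:-minio-console}"
  port="${2:-9001}"
  cat <<EOF
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: ${svc}
spec:
  entryPoints:
    - web
  routes:
    - match: Host(\`minio-console.localhost\`)
      kind: Rule
      services:
        - name: ${svc}
          port: ${port}
EOF
}

ingressroute_manifest minio-console 9001
# On a real cluster: ingressroute_manifest | kubectl apply -f -
```

The backticks are escaped inside the unquoted heredoc so that the shell does not treat them as command substitution; the printed manifest contains plain backticks, as Traefik's rule syntax expects.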

Stopping the Test Instances

```shell
whoami-app$ docker-compose -f docker-compose-v2.yml down
Stopping whoami-app_whoami_1 ... done
Stopping whoami-app_whoami_3 ... done
Stopping whoami-app_whoami_2 ... done
Removing whoami-app_whoami_1 ... done
Removing whoami-app_whoami_3 ... done
Removing whoami-app_whoami_2 ... done
Removing network whoami-app_default
```

MC Client

MinIO Client Complete Guide Slack

```shell
wget https://dl.min.io/client/mc/release/linux-amd64/mc
chmod +x mc
./mc --help
```

Configure an S3 server:

```shell
./mc config host add mystorage http://minio.localhost test1234access test1234secret --api s3v4
mc: Configuration written to `/home/michael/.mc/config.json`. Please update your access credentials.
mc: Successfully created `/home/michael/.mc/share`.
mc: Initialized share uploads `/home/michael/.mc/share/uploads.json` file.
mc: Initialized share downloads `/home/michael/.mc/share/downloads.json` file.
Added `mystorage` successfully.
```

View information:

```shell
~$ ./mc admin info play/
●  play.min.io
   Uptime: 2 days
   Version: 2021-12-10T23:03:39Z
   Network: 1/1 OK
   Drives: 4/4 OK

11 GiB Used, 392 Buckets, 8,989 Objects
4 drives online, 0 drives offline
```

Testing MinIO with an S3 Client

```shell
~$ pip3 install awscli
```

```shell
minio$ aws configure --profile minio
```

Helm包管理器

- Helm是

k8s的包管理器,它可以类比为Debian,Ubuntu的apt.Red Hat的yum,Python中的pip.Nodejs的npm包管理器.Helm可以理解为Kubernetes的包管理工具,可以方便地发现、共享和使用为Kubernetes构建的应用,它包含几个基本概念:.Chart:一个Helm包,其中包含了运行一个应用所需要的镜像、依赖和资源定义等,还可能包含Kubernetes集群中的服务定义,类似Homebrew中的formula,APT的dpkg或者Yum的rpm文件..Release:在Kubernetes集群上运行的Chart的一个实例.在同一个集群上,一个Chart可以安装很多次.每次安装都会创建一个新的Release.MySQL Chart,如果想在服务器上运行两个数据库,就可以把这个Chart安装两次.每次安装都会生成自己的Release,会有自己的Release名称..Repository:用于发布和存储Chart的仓库.

# Download a recent release and extract it to /usr/local/bin

Installing the Tiller server

- Simplifying Kubernetes application deployment with Helm

~$ helm init

Creating /home/lcy/.helm

Creating /home/lcy/.helm/repository

Creating /home/lcy/.helm/repository/cache

Creating /home/lcy/.helm/repository/local

Creating /home/lcy/.helm/plugins

Creating /home/lcy/.helm/starters

Creating /home/lcy/.helm/cache/archive

Creating /home/lcy/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Error: Looks like "https://kubernetes-charts.storage.googleapis.com" is not a valid chart repository or cannot be reached: read tcp 172.18.127.186:54980->216.58.199.16:443: read: connection reset by peer

- As shown above, the official server cannot be reached, so in mainland China install from the Alibaba Cloud mirror instead:

~$ helm init --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.13.1 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Creating /home/lcy/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /home/lcy/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

~$ helm init --upgrade

$HELM_HOME has been configured at /home/lcy/.helm.

Tiller (the Helm server-side component) has been upgraded to the current version.

Happy Helming!

# Search for charts

~$ helm search

NAME CHART VERSION APP VERSION DESCRIPTION

stable/acs-engine-autoscaler 2.1.3 2.1.1 Scales worker nodes within agent pools

stable/aerospike 0.1.7 v3.14.1.2 A Helm chart for Aerospike in Kubernetes

stable/anchore-engine 0.1.3 0.1.6 Anchore container analysis and policy evaluation engine s...

stable/artifactory 7.0.3 5.8.4 Universal Repository Manager supporting all major packagi...

stable/artifactory-ha 0.1.0 5.8.4 Universal Repository Manager supporting all major packagi...

[...]

# Update the repositories

~$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Skip local chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈ Happy Helming!⎈

# List the repository URLs

~$ helm repo list

NAME URL

stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

local http://127.0.0.1:8879/charts

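Deploying MinIO with a Helm Chart

- MinIO Chinese documentation

- The default standalone mode requires Kubernetes 1.4+ with the Beta APIs enabled. If nothing goes wrong, the installation succeeds as shown below. If it fails, follow the troubleshooting section later on.

| Parameter | Description | Default |
|---|---|---|
| accessKey | Default access key | AKIAIOSFODNN7EXAMPLE |
| secretKey | Default secret key | wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY |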

~$ helm install stable/minio

- Inspect the Release objects

~$ kubectl get service snug-elk-minio-svc

Installing the MinIO client

- Following the NOTES printed by the server installation above, install and configure its command-line client.

~$ wget https://dl.minio.io/client/mc/release/linux-amd64/mc

~$ chmod +x mc && sudo mv mc /usr/local/bin/

~$ kubectl get svc --namespace default -l app=snug-elk-minio

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

snug-elk-minio-svc LoadBalancer 10.103.48.186 <pending> 9000:32076/TCP 25h
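The PORT(S) value 9000:32076/TCP above means service port 9000 mapped to node port 32076. Without a cloud load balancer, EXTERNAL-IP stays <pending>, so the node port is the practical way in. The parser below is a sketch that assumes kubectl's default table format. It turns that column into URLs of the form http://<node-ip>:<nodePort>/. The node IP 192.168.1.10 is a placeholder.

```python
import re
from typing import List, NamedTuple, Optional


class PortMapping(NamedTuple):
    port: int                 # Service port
    node_port: Optional[int]  # present only for NodePort / LoadBalancer Services
    protocol: str


# One entry looks like "9000:32076/TCP" or "80/TCP"; entries are comma-separated.
_PORT_RE = re.compile(r"^(\d+)(?::(\d+))?/([A-Z]+)$")


def parse_ports(column: str) -> List[PortMapping]:
    """Parse the PORT(S) column of `kubectl get svc`."""
    mappings = []
    for item in column.split(","):
        match = _PORT_RE.match(item.strip())
        if match is None:
            raise ValueError(f"unrecognised port entry: {item!r}")
        port, node_port, protocol = match.groups()
        mappings.append(PortMapping(int(port), int(node_port) if node_port else None, protocol))
    return mappings


def node_urls(node_ip: str, column: str, scheme: str = "http") -> List[str]:
    """Build <scheme>://<node-ip>:<nodePort>/ for every entry that has a node port."""
    return [f"{scheme}://{node_ip}:{m.node_port}/" for m in parse_ports(column) if m.node_port]


if __name__ == "__main__":
    print(node_urls("192.168.1.10", "9000:32076/TCP"))  # placeholder node IP
```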

Installing the cluster monitoring Dashboard

~$ kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

- Check whether the installation succeeded.

~$ kubectl --namespace=kube-system get pod -l k8s-app=kubernetes-dashboard

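- Based on the output above, delete the existing Dashboard pods with kubectl -n kube-system delete $(kubectl -n kube-system get pod -o name | grep dashboard), then change the image address to the Alibaba Cloud mirror in China.

- Run kubectl edit deployment/kubernetes-dashboard -n kube-system to open the editor, replace k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1 with registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1, then save and exit.

# After the change, the installation succeeded.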
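The manual edit above swaps the registry prefix and keeps the repository name and tag. Below is a sketch of that rewrite. The only mapping is the one taken from the Dashboard example. Whether a given mirror actually hosts a particular image (for example redis:e2e) has to be checked separately.

```python
from typing import Dict

# Registry prefix -> mirror prefix. Only this entry comes from the Dashboard example above.
MIRRORS: Dict[str, str] = {
    "k8s.gcr.io/": "registry.cn-hangzhou.aliyuncs.com/google_containers/",
}


def rewrite_image(image: str, mirrors: Dict[str, str] = MIRRORS) -> str:
    """Replace a known registry prefix, keeping repository name and tag; unknown images pass through."""
    for source, target in mirrors.items():
        if image.startswith(source):
            return target + image[len(source):]
    return image


if __name__ == "__main__":
    print(rewrite_image("k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1"))
```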

Access via Proxy plus SSH forwarding

# On the k8s server

Changing the Service port type

- Run kubectl --namespace=kube-system edit svc kubernetes-dashboard to open the editor, and replace type: ClusterIP with type: NodePort.

~$ kubectl --namespace=kube-system edit svc kubernetes-dashboard

~$ kubectl --namespace=kube-system get svc kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.107.85.75 <none> 443:31690/TCP 65m

- As shown above, the Dashboard is now reachable at https://<service-host>:31690/, where <service-host> is the IP of any cluster node.
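The Service type can also be changed non-interactively with kubectl patch and a small merge patch, instead of editing it in the editor. The sketch builds the argument list in Python. The helper name is hypothetical, and running the command requires kubectl on the PATH, e.g. subprocess.run(cmd, check=True).

```python
import json
import shlex
from typing import List

# ExternalName is left out because it also requires spec.externalName.
_ALLOWED_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def patch_service_type_cmd(name: str, namespace: str, svc_type: str = "NodePort") -> List[str]:
    """Build a kubectl patch command that changes spec.type of a Service."""
    if svc_type not in _ALLOWED_TYPES:
        raise ValueError(f"unsupported Service type: {svc_type}")
    patch = json.dumps({"spec": {"type": svc_type}}, separators=(",", ":"))
    return ["kubectl", "--namespace", namespace, "patch", "svc", name, "-p", patch]


if __name__ == "__main__":
    cmd = patch_service_type_cmd("kubernetes-dashboard", "kube-system")
    # Shell-quoted form for copy/paste.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```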

Access authentication

- Creating sample user

- Access control

- The first time you open the Dashboard, it offers two login methods: Kubeconfig and Token. The steps below follow Creating sample user.

Creating a service account

~$ cat admin-user.yaml

- Open the Dashboard home page and enter the token value obtained above.

Creating a cluster role binding

~$ cat dashboard-admin.yaml
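The contents of admin-user.yaml and dashboard-admin.yaml are not shown here. This sketch assumes they follow the Creating sample user guide: a ServiceAccount named admin-user bound to the cluster-admin ClusterRole. Under that assumption, it produces an equivalent v1 List as JSON that kubectl apply -f can read. It also includes a helper to decode the base64 token field of a Secret. The kube-system namespace is assumed to match this Dashboard v1.10.1 install. cluster-admin grants full control of the cluster.

```python
import base64
import json


def admin_user_manifests(name: str = "admin-user", namespace: str = "kube-system") -> dict:
    """ServiceAccount plus a ClusterRoleBinding to cluster-admin, wrapped in a v1 List."""
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},  # cluster-scoped, so no namespace
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        "subjects": [{"kind": "ServiceAccount", "name": name, "namespace": namespace}],
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service_account, binding]}


def decode_secret_token(encoded: str) -> str:
    """Secret data values are base64-encoded; the Dashboard login expects the decoded token."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    # Save the output as e.g. admin-user.json and run: kubectl apply -f admin-user.json
    print(json.dumps(admin_user_manifests(), indent=2))
```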

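Updating the Dashboard

- Installing the Dashboard does not automatically overwrite an older local version. You must delete the old version manually before installing the new one.

~$ kubectl -n kube-system delete $(kubectl -n kube-system get pod -o name | grep dashboard)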

Official quick-start tutorial

Guestbook example

~$ git clone https://github.com/kubernetes/examples

Installing the Redis Master Pod

~$ cd examples/guestbook && ls *.yaml

- Note that k8s.gcr.io above cannot be reached from mainland China, so the image in redis-master-deployment.yaml has to be changed. Here, docker search redis:e2e was used to find some mirror images.

~$ docker search redis:e2e

Installing the Redis Master Service

~$ cat redis-master-service.yaml

Installing the Redis Slave Pod

$ cat redis-slave-deployment.yaml

Installing the Redis Slave Service

~$ kubectl apply -f redis-slave-service.yaml

Installing the Frontend Pod

~$ cat frontend-deployment.yaml

Installing the Frontend Service

~$ kubectl apply -f frontend-service.yaml

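Troubleshooting

Connection certificate error

~$ kubectl get node

- This error usually occurs when ~/.kube/config was not refreshed after kubeadm reset. Copying the new admin config fixes it: sudo cp /etc/kubernetes/admin.conf ~/.kube/config && sudo chown $(id -u):$(id -g) ~/.kube/config.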

Node join error

~$ sudo kubeadm join 172.18.127.186:6443 --token z8r97j.3ovdfddb6df9lnq7 --discovery-token-ca-cert-hash sha256:07767a67fa6c38feda7471ee5e1a15a0a9c417cfdf6cf457ff577297f22d9415

- If joining a node takes a long time and still fails in the end, add -v=6 to see the following error:

~$ sudo kubeadm join 172.18.127.186:6443 --token z8r97j.3ovdfddb6df9lnq7 --discovery-token-ca-cert-hash sha256:07767a67fa6c38feda7471ee5e1a15a0a9c417cfdf6cf457ff577297f22d9415 -v=6

[...]

I0414 16:23:00.398178 13202 token.go:200] [discovery] Trying to connect to API Server "172.18.127.186:6443"

I0414 16:23:00.398724 13202 token.go:75] [discovery] Created cluster-info discovery client, requesting info from "https://172.18.127.186:6443"

I0414 16:23:00.402234 13202 round_trippers.go:438] GET https://172.18.127.186:6443/api/v1/namespaces/kube-public/configmaps/cluster-info 200 OK in 3 milliseconds

I0414 16:23:00.402426 13202 token.go:203] [discovery] Failed to connect to API Server "172.18.127.186:6443": token id "z8r97j" is invalid for this cluster or it has expired. Use "kubeadm token create" on the control-plane node to create a new valid token

[...]

- If you see this error, run the command below on the Master node, then run the kubeadm join command it prints on the node you want to add.

~$ kubeadm token create --print-join-command

# Re-join using the output below.

kubeadm join 172.18.127.186:6443 --token in1l6v.ue78pr5vvr55qcad --discovery-token-ca-cert-hash sha256:a1f80db7a76e214dd529fc2aed660d71428994d9104c1b320bf5abb6cda4b165
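The discovery error above names the token id z8r97j. A kubeadm bootstrap token has the form <6-char id>.<16-char secret>, using lowercase letters and digits only. By default, it expires after 24 hours. The sketch checks that format and extracts the endpoint, token, and CA hash from a printed join command. It handles only the space-separated flag form shown above, and the helper names are hypothetical.

```python
import re
import shlex
from typing import Dict, Tuple

# Bootstrap token layout: <token-id>.<token-secret>, lowercase letters and digits only.
_TOKEN_RE = re.compile(r"^([a-z0-9]{6})\.([a-z0-9]{16})$")


def split_token(token: str) -> Tuple[str, str]:
    """Return (token_id, token_secret) or raise ValueError for a malformed token."""
    match = _TOKEN_RE.match(token)
    if match is None:
        raise ValueError(f"not a bootstrap token: {token!r}")
    return match.group(1), match.group(2)


def parse_join_command(line: str) -> Dict[str, str]:
    """Extract endpoint, token and CA hash from `kubeadm join ...` (space-separated flags only)."""
    args = shlex.split(line)
    if args[:2] != ["kubeadm", "join"] or len(args) < 3:
        raise ValueError("not a kubeadm join command")
    result = {"endpoint": args[2]}
    for flag in ("--token", "--discovery-token-ca-cert-hash"):
        if flag in args:
            i = args.index(flag)
            if i + 1 >= len(args):
                raise ValueError(f"{flag} has no value")
            result[flag.lstrip("-")] = args[i + 1]
    if "token" in result:
        split_token(result["token"])  # validate the token format
    return result
```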

Chart installation error

~$ helm install stable/minio

- First, update the repository.

~$ helm init --upgrade

$HELM_HOME has been configured at /home/lcy/.helm.

Tiller (the Helm server-side component) has been upgraded to the current version.

Happy Helming!

~$ helm repo list

NAME URL

stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

local http://127.0.0.1:8879/charts

- Check the status of the k8s system Pods, focusing on tiller-deploy.

~$ kubectl -n kube-system get po

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5cbcccc885-krbzj 1/1 Running 0 17h

[...]

kube-scheduler-k8s-master 1/1 Running 0 18h

tiller-deploy-c48485567-m7kj2 0/1 ErrImagePull 0 2m50s

- The output above shows that tiller-deploy failed to pull its image and is not running. Check the details next.

~$ kubectl describe pod tiller-deploy-c48485567-m7kj2 -n kube-system

- The pull fails because the image comes from the gcr.io registry. Pick a mirror from docker search tiller | grep "Mirror", then edit the deployment with the command below.

~$ kubectl edit deploy tiller-deploy -n kube-system

[....]

- In the editor, replace image: gcr.io/kubernetes-helm/tiller:v2.13.1 with image: sapcc/tiller:v2.13.1. Running the next command then shows tiller running successfully.

~$ kubectl get pod -n kube-system | grep "tiller"
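The tiller-deploy check above (READY 0/1, STATUS ErrImagePull) can be scripted. This sketch parses the default kubectl get po table and lists pods that are not Running or not fully ready. It assumes whitespace-separated columns and skips lines whose column count does not match the header, such as [...]. In practice, kubectl get po -o json is the more robust input.

```python
from typing import Dict, List


def parse_table(text: str) -> List[Dict[str, str]]:
    """Parse kubectl's default whitespace-aligned table; lines whose column count differs are skipped."""
    lines = [line for line in text.strip().splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        cols = line.split()
        if len(cols) == len(header):
            rows.append(dict(zip(header, cols)))
    return rows


def unhealthy_pods(rows: List[Dict[str, str]]) -> List[str]:
    """Names of pods that are not Running or have fewer ready containers than total."""
    bad = []
    for row in rows:
        ready, total = row["READY"].split("/")
        if row["STATUS"] != "Running" or ready != total:
            bad.append(row["NAME"])
    return bad
```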

Error: no available release name found

~$ kubectl create serviceaccount --namespace kube-system tiller

kubeadm init errors

~$ sudo kubeadm init --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version v1.14.1 --pod-network-cidr=10.244.0.0/16

- ERROR NumCPU: adding the flag --ignore-preflight-errors=NumCPU downgrades it to a warning.

- ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables: handle it as follows:

~$ sudo apt-get install bridge-utils

# A reboot may be required

~$ sudo modprobe bridge

~$ sudo modprobe br_netfilter

~$ cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

~$ sudo sysctl --system

kube-proxy and iptables issue

~$ kubectl -n kube-system logs kube-proxy-xxx

W0514 00:21:27.445425 1 server_others.go:267] Flag proxy-mode="" unknown, assuming iptables proxy

cat mysql-pass.yaml
